The Coordination Assessment

by EU DisinfoLab Researcher Antoine Grégoire

See the Second Branch of the CIB Detection Tree here

Disclaimer

This publication has been made in the framework of the WeVerify project. It reflects the views only of the author(s), and the European Commission cannot be held responsible for any use, which may be made of the information contained therein.

Introduction

The coordination of accounts and the adoption of techniques to publish, promote and spread false content is referred to as “Coordinated Inauthentic Behaviour” (CIB). Coordinated Inauthentic Behaviour has never been clearly defined, and its meaning may vary from one platform to another. Nathaniel Gleicher, Head of Cybersecurity Policy at Facebook, explains it as when “groups of pages or people work together to mislead others about who they are or what they are doing”.

This definition moves away from the need to assess whether content is true or false: some activity can be classed as coordinated inauthentic behaviour even when it involves true or authentic content. However, cases that spread authentic content without trying to take advantage of how the platform algorithms work are seldom seen and are expected to be very rare. CIB may involve networks concealing the geographical area from which they operate, for ideological reasons or financial motivation.

The examples given to explain what CIB is provide insight into what social network activity might be marked as CIB, leading to content and account removal. However, a clear definition of CIB for each platform and a wider cross-platform definition are missing, for example a clear line between what makes an activity authentic or coordinated and what does not. There have been reports of activities that observers broadly agreed to interpret as CIB, but platforms responded by explaining that these activities did not meet their definitions of CIB. This becomes especially important for topics related to politics, such as elections, when platforms take on the role of deciding what is CIB and what is not, based on an unclear and sometimes flexible set of definitions and without providing clear explanations.

Although a clear and widely accepted definition of CIB is currently missing, the concept of account networks with varying ways of interacting with each other is central to any attempt to identify CIB. Responding to the growing need for transparency in such identifications, researchers and investigators are making several other efforts to expose CIB. This response sits at the crossroads of manual investigation and data science. It needs to be supported with appropriate and useful tools that take into account the potential and the capacity of these non-platform efforts. The approach of the EU Disinfo Lab is to deliver a set of tools that enable and trigger new research and new investigations, decentralizing research and supporting democratization.

We also hope this methodology will help researchers and NGOs to populate their investigations and reports with evidence of CIB. This could improve the communication channels between independent researchers and online platforms, and provide wider and more public knowledge of platforms’ policies and takedowns around CIB and Information Operations.

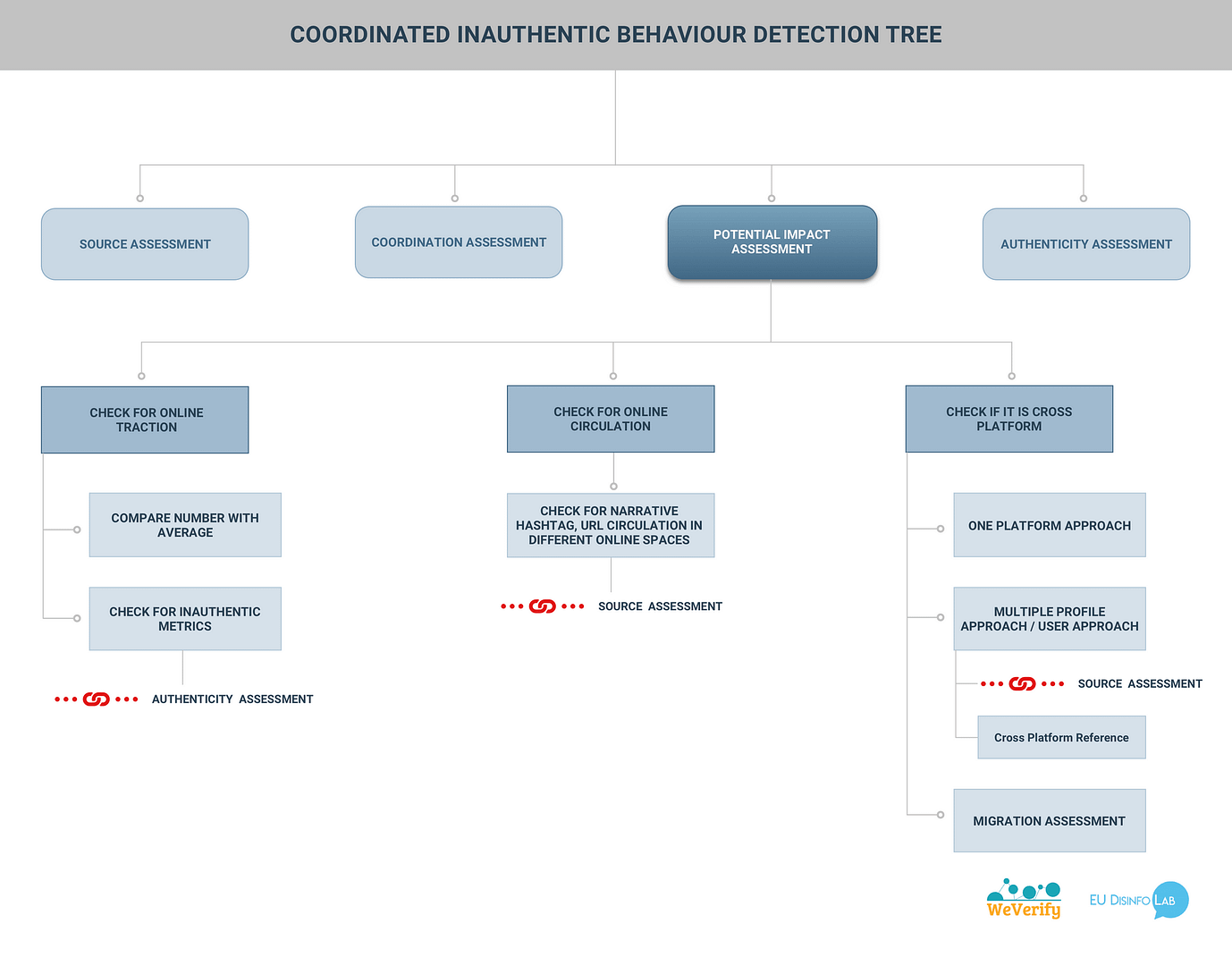

The tools we propose take the form of a CIB detection tree. It has four main branches, which correspond to a series of four blogposts to be published in the coming months.

Here is the first blogpost: The Coordination Assessment branch. Or how to detect the C in CIB.

The following figure shows a brief overview of the Coordination Assessment branch. (A detailed version of the detection tree is to come.)

Check for suspicious timestamps

Why and where to look?

Examining timestamps and posting times is one of the best ways to assess automation, Coordinated Inauthentic Behaviour by a human artificially pushing content or copy-pasting the same content, or several accounts coordinating to push a narrative. Suspicious timestamps can appear in various places and, yes, at various times: the posting time, the creation date of multiple accounts, the first post of a series of accounts, or a series of accounts all following the same target at the same time.

Indian Chronicles

In our report Indian Chronicles we examined the posting times of one of the “journalists” publishing on the website EU Chronicles to assess whether this person really existed. We found that the timestamps of the articles raised suspicion, reading XXhX9. In addition, the timestamps did not fit a normal day and night cycle and showed no sleep time.
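A check of this kind can be sketched in a few lines of Python. This is only an illustration, not the method used in the report: the function names are hypothetical, and the input is assumed to be a list of `datetime` objects already collected for a single author. The first helper looks for the longest stretch of the day without any post (a rough proxy for sleep time), the second counts the last digit of the minute field, which is one way to surface a recurring pattern in posting minutes.

```python
from collections import Counter
from datetime import datetime


def hour_histogram(timestamps):
    """Number of posts for each hour of the day (index 0 to 23)."""
    counts = Counter(ts.hour for ts in timestamps)
    return [counts.get(hour, 0) for hour in range(24)]


def longest_quiet_gap(timestamps):
    """Longest run of consecutive clock hours with no post at all.

    The clock is walked twice so that a gap spanning midnight is
    counted as one run. A value near 0 means no visible rest period.
    """
    hist = hour_histogram(timestamps)
    if not any(hist):
        return 24
    longest = current = 0
    for step in range(48):
        if hist[step % 24] == 0:
            current += 1
            longest = max(longest, current)
        else:
            current = 0
    return min(longest, 24)


def minute_last_digit_counts(timestamps):
    """How often each last digit (0 to 9) of the minute field occurs."""
    return Counter(ts.minute % 10 for ts in timestamps)


if __name__ == "__main__":
    # Hypothetical data: one post per hour, around the clock.
    posts = [datetime(2020, 1, 1, hour, 19) for hour in range(24)]
    print(longest_quiet_gap(posts))
    print(minute_last_digit_counts(posts).most_common(1))
```

Timestamps should be converted to the supposed local time zone of the author first; a quiet gap that looks normal in one time zone may be implausible in another.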

Series of accounts posting the same content

After the release of the Indian Chronicles report, the EU Disinfo Lab was the target of a CIB campaign on Twitter. (Part of this campaign can be seen here using this save.) Examining the timestamps also allowed us to determine it was CIB. In this case, the tweets targeting the EU Disinfo Lab came from various accounts and were posted with very little time between them, sometimes less than 3 seconds.

Examining timestamps helps to assess, or at least gives a hint of, whether content is being artificially pushed and whether coordination can be suspected, by revealing inauthentic posting times. Conversely, suspicious timestamps are also good supporting evidence when one already suspects a network of engaging in CIB or has found other clues. Around 600 accounts were later removed by Twitter after being identified as CIB.
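As a minimal sketch of how such bursts could be spotted, assuming the posts have already been collected as `(account, timestamp)` pairs, the following Python groups posts that follow each other within a few seconds and keeps the groups involving several distinct accounts. The function name and the thresholds are illustrative assumptions, not values used in our investigation.

```python
from datetime import datetime, timedelta


def find_bursts(posts, max_gap_seconds=3, min_accounts=3):
    """Group posts whose consecutive timestamps are at most
    max_gap_seconds apart, and keep the groups that involve at least
    min_accounts distinct accounts.

    posts: iterable of (account, datetime) pairs.
    """
    ordered = sorted(posts, key=lambda post: post[1])
    bursts, current = [], []
    for post in ordered:
        gap_too_long = (
            current
            and (post[1] - current[-1][1]).total_seconds() > max_gap_seconds
        )
        if gap_too_long:
            if len({account for account, _ in current}) >= min_accounts:
                bursts.append(current)
            current = []
        current.append(post)
    # Do not forget the last open group.
    if len({account for account, _ in current}) >= min_accounts:
        bursts.append(current)
    return bursts


if __name__ == "__main__":
    start = datetime(2021, 1, 1, 12, 0, 0)
    sample = [
        ("account_a", start),
        ("account_b", start + timedelta(seconds=2)),
        ("account_c", start + timedelta(seconds=4)),
        ("account_d", start + timedelta(minutes=5)),
    ]
    for burst in find_bursts(sample):
        print([account for account, _ in burst])
```

A burst is only a lead: breaking news or a popular hashtag can also produce many posts within seconds, so the accounts in each burst still need to be examined by hand.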

Check for authentic coordinated behaviours

In this branch, we will try to assess if a specific network is engaged in coordinated behaviours, and if coordinated behaviours can be tied to a specific network. The authenticity will be the subject of another blogpost, but some CIB can in fact be organized by real accounts coordinating to manipulate, to harm or to push a narrative. It is also crucial to make the distinction between a CIB campaign and authentic coordination, interactions or social networking that will happen a lot on… social networks.

To reach that goal, it is important to conduct appropriate checks, as is the rule for any OSINT work, but in this case with the aim of matching a network with a coordinated behaviour.

Identify Networks

Once the network has been identified, one can try to assess whether there is coordination within it. Assessing the coordination of a network and identifying the network must go hand in hand. A “network”, or a grouping of people on a social network, will always be found, so identifying a network without assessing coordination can lead to false positives. Conversely, coordinated behaviour between people on social networks is normal and very common.

But here, we want to assess whether the network is acting in a coordinated manner or whether a coordination pattern can be related to a network. If both criteria are met, the result can point to CIB.

Assess network via interacting accounts

In the EU Disinfo Lab’s report “The Monetization of Disinformation through Amazon: La Verdadera Izquierda” we used the tool TruthNest to identify a network of accounts that was, in that case, run by the same operator. The TruthNest cross-analysis of the accounts showed the same series of accounts in the “most retweeted account” section, indicating strong ties between them.

Moreover, by examining these accounts in detail we could observe they were sharing the same Amazon links at the same time. (This criterion is part of a different branch of the CIB tree and will be the subject of a subsequent blogpost.) Thus, we determined it was a coordinated network. The investigation proved it was run by the same operator.

Figure 3 Screenshot of p. 15 of the EU Disinfo Lab report “The Monetization of Disinformation through Amazon: La Verdadera Izquierda” showing identification of the network through identifying most retweeted users via TruthNest
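The idea of comparing “most retweeted” lists can be sketched as follows. This is not TruthNest’s API or algorithm: it assumes that, for each account under study, the list of accounts it retweeted has already been exported by some means, and it simply measures how much the top lists of two accounts overlap (Jaccard similarity). All names and thresholds are hypothetical.

```python
from collections import Counter
from itertools import combinations


def top_retweeted(retweeted_accounts, n=5):
    """Set of the n accounts that appear most often in the list."""
    return {name for name, _ in Counter(retweeted_accounts).most_common(n)}


def overlap_pairs(retweets_by_account, n=5, threshold=0.5):
    """Pairs of accounts whose top-n retweeted sets overlap strongly.

    retweets_by_account: dict mapping an account to the list of
    accounts it retweeted (one entry per retweet).
    Returns (account_a, account_b, jaccard_score) tuples.
    """
    tops = {
        account: top_retweeted(retweets, n)
        for account, retweets in retweets_by_account.items()
    }
    pairs = []
    for a, b in combinations(sorted(tops), 2):
        union = tops[a] | tops[b]
        if not union:
            continue
        score = len(tops[a] & tops[b]) / len(union)
        if score >= threshold:
            pairs.append((a, b, score))
    return pairs


if __name__ == "__main__":
    sample = {
        "acc1": ["x", "x", "y", "z"],
        "acc2": ["x", "y", "y", "z"],
        "acc3": ["q", "r"],
    }
    print(overlap_pairs(sample))
```

A high overlap alone does not prove common operation, since fans of the same public figure will also retweet the same accounts; it indicates which accounts deserve a closer manual look.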

Check if the network is already known or some accounts already appear in previous fact-checking archives

CIB accounts are often re-used or repurposed, and CIB networks can be used for different campaigns. Facebook takedown reports show that PR agencies are sometimes involved in CIB, and it is likely that the same networks are used for different campaigns. Graphika also found previously identified networks returning. It is therefore useful to check whether a CIB account or network is related to previously known accounts or already fact-checked material. If the reuse or repurposing of a network is identified, this will help further investigation by pointing to the potential source of the CIB campaign and by proving CIB usage in multiple campaigns.
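When a local archive of previously reported accounts is available, this check can be as simple as a normalised lookup. The sketch below assumes a hypothetical archive stored as a mapping from account handle to the report in which it appeared; no real dataset or API is implied.

```python
def normalise(handle):
    """Strip whitespace and a leading @, and lower-case the handle."""
    return handle.strip().lstrip("@").lower()


def already_known(network, archive):
    """Return the accounts of `network` that appear in `archive`.

    network: iterable of account handles under investigation.
    archive: dict mapping a handle to a label, e.g. a report name.
    """
    index = {normalise(handle): label for handle, label in archive.items()}
    return {
        handle: index[normalise(handle)]
        for handle in network
        if normalise(handle) in index
    }


if __name__ == "__main__":
    archive = {"@Old_Account": "hypothetical report 2019"}
    print(already_known(["old_account ", "new_account"], archive))
```

Handles can be changed when an account is repurposed, so a stable identifier such as a numeric account ID, when available, is a more reliable key than the handle.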

Identify network through timestamps and similar behaviour

The Stanford Internet Observatory, in its report “Sockpuppets Target Nagorno-Karabakh”, examined the timestamps of the identified networks and suspicious accounts.

Together with the timestamps, the Stanford Internet Observatory identified a suspicious coordinated behaviour: in its words, the fact that “all four accounts switched from using Twitterfeed to using Twitter Web Client for just these tweets strongly suggests that these accounts were either managed by the same individual or operated in coordination”.

Many behaviours on the web, if not all, can look like coordination. It is therefore necessary to identify a series of accounts engaged in these behaviours; looking only at the behaviour will lead to false positives. This series of accounts constitutes a network, and the network can then be investigated, processed, and checked against a previously established database. Assessing the network works together with examining the coordinated behaviour happening inside it.

Check for Network Coordinated Behaviour

Identifying the network goes hand in hand with examining coordinated behaviour within it. Here we mainly look for any behaviour adopted by a series of accounts around the same time. If a network has previously been identified, we must look for any behaviour adopted by the network. If no network has been identified, similar behaviour adopted around the same time should trigger the search for a network.

Coordinated behaviour can be the sharing of the same or similar material, a change of profile picture, the renaming of accounts, or repurposing behaviour adopted around the same time (erasing previous posts, renaming, etc.).
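One possible way to look for “the same behaviour around the same time” is a sliding time window over a log of observed account changes. The sketch below assumes such a log exists as `(account, action, timestamp)` tuples; the action labels, the one-hour window and the minimum of three accounts are arbitrary illustrative choices.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def coordinated_actions(events, window=timedelta(hours=1), min_accounts=3):
    """For each action type, find the largest set of distinct accounts
    that performed it within one sliding time window.

    events: iterable of (account, action, timestamp) tuples.
    Returns {action: set_of_accounts} for actions reaching min_accounts.
    """
    by_action = defaultdict(list)
    for account, action, timestamp in events:
        by_action[action].append((timestamp, account))

    findings = {}
    for action, items in by_action.items():
        items.sort()
        best, start = set(), 0
        for end in range(len(items)):
            # Shrink the window until it spans at most `window`.
            while items[end][0] - items[start][0] > window:
                start += 1
            accounts = {account for _, account in items[start:end + 1]}
            if len(accounts) > len(best):
                best = accounts
        if len(best) >= min_accounts:
            findings[action] = best
    return findings


if __name__ == "__main__":
    t0 = datetime(2020, 6, 1, 9, 0)
    log = [
        ("a", "rename", t0),
        ("b", "rename", t0 + timedelta(minutes=5)),
        ("c", "rename", t0 + timedelta(minutes=10)),
        ("d", "rename", t0 + timedelta(days=1)),
    ]
    print(coordinated_actions(log))
```

The result is a candidate set of accounts, which then has to be checked against the network assessment described in this branch.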

Rappler detecting account repurposing around the same time

Rappler discovered that some of the accounts in its investigation changed names and profile pictures in a coordinated manner. Fan page accounts created to mimic K-Pop idols and gather followers later changed names and profile pictures, and were repurposed to join a network supporting a particular narrative. This repurposing technique, sometimes used in CIB, will be examined further in a forthcoming blogpost on the Authenticity Assessment branch. Here, however, it is possible to assess whether this behaviour is happening in a coordinated manner.

Graphika used the date of first posting

Graphika, in “The case of inauthentic reposting activists”, used the date of first posting to identify or prove coordination.
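A simple version of this check, which is not Graphika’s actual procedure, is to compute the first post of each account and group accounts whose first post falls on the same day. The input format and the minimum group size below are assumptions made for the example.

```python
from collections import defaultdict
from datetime import datetime


def first_post_clusters(posts, min_accounts=3):
    """Group accounts by the calendar day of their first post.

    posts: iterable of (account, datetime) pairs, in any order.
    Returns {date: set_of_accounts} for days shared by at least
    min_accounts accounts.
    """
    first_seen = {}
    for account, timestamp in posts:
        if account not in first_seen or timestamp < first_seen[account]:
            first_seen[account] = timestamp

    by_day = defaultdict(set)
    for account, timestamp in first_seen.items():
        by_day[timestamp.date()].add(account)

    return {
        day: accounts
        for day, accounts in by_day.items()
        if len(accounts) >= min_accounts
    }


if __name__ == "__main__":
    sample = [
        ("a", datetime(2020, 3, 2, 10, 0)),
        ("b", datetime(2020, 3, 2, 11, 30)),
        ("c", datetime(2020, 3, 2, 18, 45)),
        ("d", datetime(2019, 7, 14, 8, 0)),
    ]
    print(first_post_clusters(sample))
```

The same grouping can be applied to account creation dates when they are available.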

From this analysis, we can see that, once a network is identified, criteria like the first post, a change of profile picture at the same time, or the sharing of similar links can be used to assess whether there is coordination. However, this coordination assessment must be double-checked by assessing the existence of a network. As we said, coordination without a network and a network without coordination are among the most common things on social media, and neither will necessarily help to prove CIB.

Attempt to assess the intent

Intent can be determined by various means: investigation of a network, suspicion about the aim of a campaign, or suspicion of renewed activity from a previously identified network. Once the intent of a CIB campaign has been determined or suspected, it is possible to work back from the results and effects of the campaign to its origins, by contextualizing the time of activity, by detecting outside calls for coordination, or by tracking back the results and effects of the campaign. If a particular political group is known to be the target of a CIB campaign, then CIB can be detected in the answers, comments or mentions on social networks.

Check for context of suspicious activity

Once a suspicious timestamp, activity, network or coordinated behaviour is identified, it should be checked against a particular context or event.

In a CIB campaign, there can be intent and a purpose behind a campaign. This purpose and intent will appear if suspicious activity around a network is properly contextualized.

The WeVerify Twitter SNA tool allows one to focus on a specific period around the activity of a network or a hashtag. Luca Hammer’s Account Analysis tool has a similar feature.

Identify context period and match with activity

The Stanford Internet Observatory report, “Sockpuppets Target Nagorno-Karabakh”, identified the activity of the network and divided it into three periods.

In its conclusion, the Stanford Internet Observatory determined that “Accounts in this network increased activity around several flash points in the ongoing conflict between Armenia and Azerbaijan over the Nagorno-Karabakh region.”

Contextualization is one of the keys to determining intent, and thus gives strong hypotheses and leads on possible orchestrators for further investigation.
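To illustrate how activity can be matched against a context period, the sketch below compares the average daily activity of a network in a window around each known event with its average on all other days. It is not the Stanford Internet Observatory’s method; the event names, the window size and the data layout (a mapping from date to number of posts) are assumptions for the example.

```python
from datetime import date, timedelta


def event_lift(daily_counts, events, window_days=2):
    """Ratio between mean daily activity around each event and the
    mean daily activity on days outside every event window.

    daily_counts: dict mapping date -> number of posts that day.
    events: dict mapping an event name -> date of the event.
    A ratio well above 1 means activity rose around that event.
    """
    covered, in_window = set(), {}
    for name, day in events.items():
        days = [
            day + timedelta(days=offset)
            for offset in range(-window_days, window_days + 1)
        ]
        covered.update(days)
        in_window[name] = sum(daily_counts.get(d, 0) for d in days) / len(days)

    baseline_days = [d for d in daily_counts if d not in covered]
    if not baseline_days:
        return {}  # nothing to compare against
    baseline = sum(daily_counts[d] for d in baseline_days) / len(baseline_days)
    if baseline == 0:
        return {
            name: float("inf") if mean > 0 else 0.0
            for name, mean in in_window.items()
        }
    return {name: mean / baseline for name, mean in in_window.items()}


if __name__ == "__main__":
    start = date(2020, 9, 1)
    counts = {start + timedelta(days=i): 2 for i in range(30)}
    for i in range(13, 18):  # hypothetical surge of activity
        counts[start + timedelta(days=i)] = 10
    print(event_lift(counts, {"hypothetical_event": date(2020, 9, 16)}))
```

The list of events has to come from the investigator’s own knowledge of the context; the code only quantifies a match that a human has already hypothesised.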

An automated contextualization?

Some platforms have developed ways to interpret CIB activities following that principle. The crowd-sourced review platform Yelp, for instance, developed an event alert.

Yelp developed a seemingly extremely useful technique of contextualization and was able to create an event alert, tracking unusual activity “motivated by a recent news event rather than an actual consumer experience.”

By implementing its Unusual Activity Alert, Yelp was able to identify whether the reviews of a business were motivated by goals totally unrelated to the business itself. Some reviews could be politically motivated, as for the “Comet Ping Pong” pizzeria, which was at the centre of the “Pizzagate” conspiracy. Some reviews were the result of fan communities coordinating to avenge their idol after perceived ill-treatment in a restaurant, or of fans reacting to a particular scandal involving their idol and a business. Yelp even identified viral challenges organized on other platforms:

“Most notable reasons we issued an Unusual Activity Alert in 2019: Yelp removed over 2,000 reviews that weren’t based on first-hand experiences in 2019 after numerous businesses made headlines — either deliberately or accidentally — for something related to President Trump and his administration. (…) We removed more than 1,100 reviews after fans took to Yelp to defend their favourite celebrities. (…) Last year YouTubers began challenging one another to film their experiences at the worst-reviewed businesses in their area. This caused Yelp to remove more than 80 reviews that were spurred by this viral challenge.”

Matching unusual activity (or inactivity) with a specific period or context can be the key to detecting CIB or understanding the motivation behind a campaign.
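Yelp has not published the details of its alert in the material quoted here, so the following is only a generic sketch of how unusual activity could be flagged: a day is marked as unusual when its count exceeds the mean of the preceding days by several standard deviations. The history length, the multiplier and the minimum count are arbitrary assumptions.

```python
from statistics import mean, pstdev


def unusual_days(counts, history=7, k=3.0, min_count=5):
    """Indices of days whose count is far above the recent norm.

    counts: list of daily counts (reviews, mentions, replies...).
    A day is flagged when its count is at least min_count and exceeds
    mean + k * standard deviation of the previous `history` days.
    """
    flagged = []
    for i in range(history, len(counts)):
        past = counts[i - history:i]
        threshold = mean(past) + k * pstdev(past)
        if counts[i] >= min_count and counts[i] > threshold:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    daily_reviews = [1, 2, 1, 0, 2, 1, 1, 40, 1]
    print(unusual_days(daily_reviews))
```

A flagged day is the starting point for contextualization: the investigator then looks for a news event, a scandal or an outside call that could explain the surge.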

Check for outside calls for coordination

In some cases, it is possible to look for calls for coordination made outside the platform where the CIB will happen. Rather than trying to identify coordination and then going back to the source, it can be easier to identify the source of coordination before the coordination itself.

Calls for writing fake reviews

For instance, the UK media outlet Which, working on fake reviews, was able to find outside calls for coordination and Facebook groups set up to engage in coordinated inauthentic behaviour on another platform.

In this case, some people were organising the monetisation of CIB, so they needed to reach clients who would write fake reviews on Amazon in exchange for money or free products. This kind of coordination can be cross-platform: calls for coordination can be made on one platform for operations on a different platform.

Identifying keywords used to rally

Rappler was also able to identify some groups through the keywords they used to rally people. Such a methodology can be reproduced by looking for generic search terms or for more specific keywords used by a network.

Sometimes calls for rallying a CIB campaign can be as open as “Join us to fight this propaganda” to push a narrative or “Amazon UK Reviewers” to manipulate reviews or metrics.

Looking for the keyword “raid”, for instance, on the infamous French Forum “18-25” will give many subforums where Coordinated Inauthentic Behaviour actions are organised to harass a specific target.
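Once a list of group names, thread titles or post texts has been collected by whatever means the platform allows, matching them against rallying keywords is straightforward. The sketch below is an assumption-laden example: the titles are invented, and whole-word matching is used so that a keyword like “raid” does not match inside an unrelated word.

```python
import re


def match_rally_keywords(titles, keywords):
    """Return the titles containing any keyword as a whole word,
    ignoring case, with the keywords found in each of them.
    """
    patterns = {
        keyword: re.compile(r"\b" + re.escape(keyword) + r"\b", re.IGNORECASE)
        for keyword in keywords
    }
    hits = {}
    for title in titles:
        found = [kw for kw, pattern in patterns.items() if pattern.search(title)]
        if found:
            hits[title] = found
    return hits


if __name__ == "__main__":
    titles = [
        "Amazon UK Reviewers",
        "Gardening tips",
        "RAID ce soir",
        "Afraid of spiders",
    ]
    print(match_rally_keywords(titles, ["raid", "reviewers"]))
```

Keyword lists need to be adapted to the language and slang of each community, and every hit still needs to be read in context.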

Detect Cluster or Groups by tracking back the intent

Conversely, it is possible to start from the results and the intent of a suspected CIB campaign and, from there, trace back the group or cluster at its origin and prove that CIB exists. Let’s consider a series of five-star reviews on a review-based website. From this result, one can suspect that the intent is to artificially boost the good reviews of a product. By following the mentions of this product on other platforms, one can then find the clusters or groups where the coordination was set up, or possible CIB profiles.

In another instance, the EU Disinfo Lab was the target of a CIB campaign aimed against the Indian Chronicles investigation. It is possible to track this intent on social networks and to investigate the negative mentions and reviews of the Indian Chronicles study to see whether CIB profiles can be detected.

Too many positive reviews, a hint of intent to manipulate?

The Which investigation detected CIB networks by identifying products inauthentically getting five-star ratings and looking for these products on other platforms to identify groups where CIB was organised to increase their ratings.
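A first-pass filter for this kind of lead, which is neither the Which methodology nor Fakespot’s algorithm, is to flag products whose ratings are almost exclusively five stars once they have enough reviews. The thresholds below are arbitrary assumptions, and the product names are placeholders.

```python
def five_star_share(ratings):
    """Fraction of ratings equal to 5 (0.0 for an empty list)."""
    if not ratings:
        return 0.0
    return sum(1 for rating in ratings if rating == 5) / len(ratings)


def flag_products(ratings_by_product, min_reviews=20, share_threshold=0.9):
    """Products with at least min_reviews ratings and a five-star
    share at or above share_threshold.

    ratings_by_product: dict mapping a product to its list of ratings.
    Returns {product: five_star_share}.
    """
    flagged = {}
    for product, ratings in ratings_by_product.items():
        if len(ratings) < min_reviews:
            continue
        share = five_star_share(ratings)
        if share >= share_threshold:
            flagged[product] = share
    return flagged


if __name__ == "__main__":
    sample = {
        "product_1": [5] * 19 + [4],
        "product_2": [5, 4, 3] * 10,
        "product_3": [5] * 5,
    }
    print(flag_products(sample))
```

A genuinely good product can also score very high, so a flagged product is only a prompt to search other platforms for groups organising its reviews.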

Possible automation for checking intent?

Another tool developed to fight inauthentic reviews, Fakespot, uses the same methodology in an automated way and is capable of detecting inauthentic reviews based on inauthentic ratings.

Intent can be deduced from working on a CIB network or by suspecting the relaunch or reemployment of a campaign. Once the targets, aim or purpose of a CIB have been identified or suspected, it helps to check on the possible results of a CIB campaign to track back the accounts engaged in it. The outside calls for coordination or public recruitment to participate in a campaign are also easier to detect once the intent has been determined.

Check for Similar Content

(part of the next branch: the source assessment)

Finally, one of the main ways to assess coordination is to look for the sharing of similar content. This other branch of our CIB detection tree will be detailed in our next blogpost: the source assessment (15 April).

Conclusion

This is the first branch of the CIB detection tree. In the other branches, we will explore other criteria that can be used, for instance to assess authenticity or traces of inauthentic language behaviour, to research similar narratives, or to check posting behaviours.

This tree was developed through careful study of the methodology used by non-platform actors to track CIB, and of the takedown reports and anti-CIB policies used by the platforms. As with any tool involving assessment, OSINT techniques or open-source investigation methodology, entering the world of CIB assessment means entering a world of false positives, false leads and second-guessing. This tree is designed to assist researchers and non-platform actors in their investigations. It was designed with automation in mind: some of the tasks described here could be automated or designed to assist human investigations. However, the tool was also designed bearing in mind that humans do the hard work and that only humans can eliminate false positives and false leads. A single branch is clearly not enough to properly assess CIB, and three more are to come; even with all four branches, we believe the CIB detection tree will remain open to improvement. We also believe it will help researchers to populate their reports with language and examples that the platforms can speak and understand. Because platforms have committed to removing and acting against CIB, this tree can be used both as a detection tool and as a tool for proving CIB in a particular network already under scrutiny.