The Authenticity Assessment

by EU DisinfoLab Researcher Antoine Grégoire

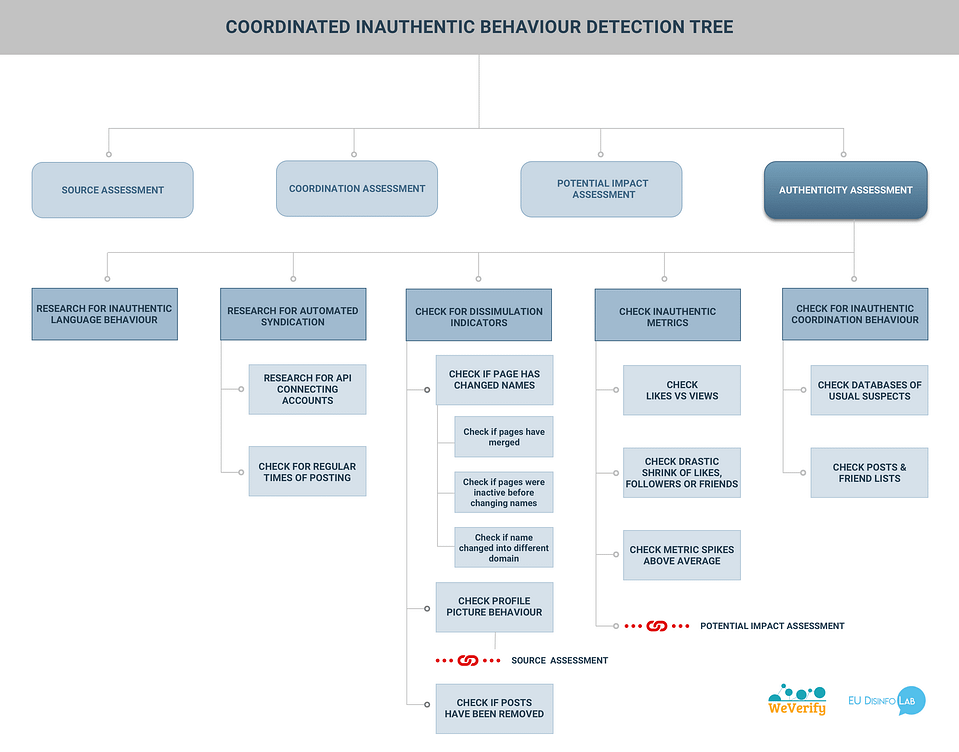

See the First Branch, Second Branch, and Third Branch of the CIB Detection Tree.

Disclaimer

This publication has been made in the framework of the WeVerify project. It reflects the views only of the author(s), and the European Commission cannot be held responsible for any use, which may be made of the information contained therein.

Introduction

The coordination of accounts and the use of techniques to publish, promote and spread false content is commonly referred to as “Coordinated Inauthentic Behaviour” (CIB). Coordinated Inauthentic Behaviour has never been clearly defined, and its meaning varies from one platform to another. Nathaniel Gleicher, Head of Cybersecurity Policy at Facebook, explains it as when “groups of pages or people work together to mislead others about who they are or what they are doing”.[1] This definition moves away from the need to assess whether content is true or false: an activity can be deemed Coordinated Inauthentic Behaviour even when it involves true or authentic content. CIB may involve networks concealing the geographical area from which they operate, for ideological reasons or financial motivation. The examples commonly used to demonstrate cases of CIB, available through the takedown reports published by the platforms, provide insight into what social network activity might be flagged as CIB, leading to the removal of content and accounts.

What is missing is a clear definition of what CIB signifies for each platform, alongside a wider cross-platform definition, e.g., a clear line between what makes an activity authentic or coordinated and what does not.[2] Even though a consensus is emerging on the notion of CIB, the interpretation may vary from one platform to another or between platforms and researchers. This becomes especially important in politically sensitive contexts, such as elections.

Although a clear and widely accepted definition of CIB is currently missing, the concept of malicious account networks, using varying ways of interacting with each other, is central to any attempt to identify CIB. Responding to the growing need for transparency in such identifications, researchers and investigators are making several efforts of their own to expose CIB. This response sits at the crossroads of manual investigation and data science. It needs to be supported with appropriate and useful tools that take into account the potential and the capacity of these non-platform-based efforts.

The approach of the EU DisinfoLab is to deliver a set of tools that enable and trigger new research and new investigations, enabling the decentralisation of research, and supporting democratisation. We also hope this methodology will help researchers and NGOs to populate their investigations and reports with evidence of CIB. This could improve the communication channels between independent researchers and online platforms to provide a wider and more public knowledge of platforms policies and takedowns around CIB and information operations.

The tools we propose take the form of a CIB detection tree. It has four main branches that correspond to a series of four blogposts published over the last few months. This article constitutes the fourth blogpost of our series: the Authenticity Assessment branch. The other branches of the CIB tree are the Coordination Assessment[3], the Source Assessment[4] and the Impact Assessment[5].

The following figure shows a brief overview of the Authenticity Assessment.

The Authenticity Assessment branch contains a set of criteria, tools and techniques to quickly assess the authenticity of an account or a piece of content. This branch is one of the most important of the CIB detection tree, as authenticity and inauthenticity, spam behaviour and fake accounts constitute a pillar of the platforms’ counter-CIB policy.

However, this branch cannot be disconnected from the others, because it only helps to assess the authenticity of the accounts under investigation one by one. It will not address the problem of coordination, which is essential in Coordinated Inauthentic Behaviour.

The inauthenticity of accounts is nonetheless essential because the involvement of “fake accounts” will normally trigger the anti-CIB policy of the platforms, especially Facebook’s. The notion of “fake accounts” is central to Facebook’s definition of CIB:

“CIB is any coordinated network of accounts, Pages and Groups on our platforms that centrally relies on fake accounts to mislead Facebook and people using our services about who is behind the operation and what they are doing.”[6]

For other platforms, the notion of a “fake account” is less central but they promise to sanction spammy behaviour. Twitter for instance, considers that:

“Platform manipulation, including spam and other attempts to undermine the public conversation, is a violation of the Twitter Rules. We take the fight against this type of behavior seriously, and we want people to be able to use Twitter without being bothered by annoying and disruptive spammy Tweets and accounts.”[7]

The assessment of account inauthenticity will thus be a powerful asset for detecting or proving CIB activity.

Inauthentic Metrics

We studied inauthentic metrics in detail in a previous blogpost. This criterion can appear in both the potential impact assessment and the authenticity assessment. Inauthentic metrics include, for instance, posts with more shares than views, accounts with too many or too few followers relative to their activity or nature, and sudden spikes or drops in metrics such as likes or followers. See the potential impact branch for more details.[8]
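As a rough illustration of two of the metric checks mentioned above, the following sketch flags posts that report more shares than views and days on which a follower count jumps or drops abruptly. The field names (`shares`, `views`), the `factor` threshold and the sample figures are assumptions made for the example, not values taken from any platform or report.

```python
from statistics import median


def shares_exceed_views(post):
    """Return True when a post reports more shares than views."""
    return post["shares"] > post["views"]


def sudden_jumps(daily_counts, factor=5.0):
    """Return the indices of days whose change from the previous day is
    more than `factor` times the median day-to-day change.

    `factor` is an arbitrary starting point that needs tuning per dataset.
    """
    changes = [abs(b - a) for a, b in zip(daily_counts, daily_counts[1:])]
    if not changes:
        return []
    baseline = median(changes) or 1  # avoid a zero baseline on flat series
    return [i + 1 for i, change in enumerate(changes) if change > factor * baseline]


# Hypothetical daily follower counts for one account.
followers = [100, 102, 101, 105, 900, 905, 903, 300]
flagged_days = sudden_jumps(followers)
```

A flagged day is only a prompt for manual review: a spike can also follow a legitimate viral post or press coverage.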

The Inauthentic Language Behaviour

Inauthentic language behaviour is a useful and easy criterion to assess inauthenticity. When looking for inauthentic language behaviour, it is useful to check specifically whether:

- The account likes or comments on content in a totally different language or alphabet from the original post

- The account comments and posts in a language or alphabet different from the one used in its biography

- The account drastically changes language and alphabet several times

- The account uses several different languages with different non-Latin alphabets

- The account repeatedly misspells words or makes repeated grammar or syntax errors, especially in languages the account is supposed to be fluent in.
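Some of the checks above can be roughly approximated in code. The sketch below compares the dominant writing system of an account's biography with that of its posts, using only the Unicode character names from Python's standard library. It detects scripts (Latin, Arabic, Cyrillic and so on), not languages, so it cannot tell Spanish from French; the function names and sample texts are illustrative assumptions.

```python
import unicodedata
from collections import Counter


def scripts_used(text):
    """Count letters per script, using the first word of each Unicode
    character name (e.g. 'LATIN', 'ARABIC', 'CYRILLIC') as a script label."""
    counts = Counter()
    for ch in text:
        if ch.isalpha():
            name = unicodedata.name(ch, "")
            if name:
                counts[name.split()[0]] += 1
    return counts


def dominant_script(text):
    """Return the most frequent script in `text`, or None if it has no letters."""
    counts = scripts_used(text)
    return counts.most_common(1)[0][0] if counts else None


def script_mismatch(bio, posts):
    """Compare the dominant script of a biography with that of each post."""
    bio_script = dominant_script(bio)
    post_scripts = {dominant_script(p) for p in posts} - {None}
    return {
        "bio_script": bio_script,
        "post_scripts": sorted(post_scripts),
        "mismatch": bio_script is not None
        and any(s != bio_script for s in post_scripts),
    }


# Hypothetical account: Spanish biography, replies in Cyrillic and Arabic script.
report = script_mismatch("Periodista y viajera", ["Это неправда", "هذا غير صحيح"])
```

A mismatch is a reason to look closer, not a verdict, since multilingual users are common.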

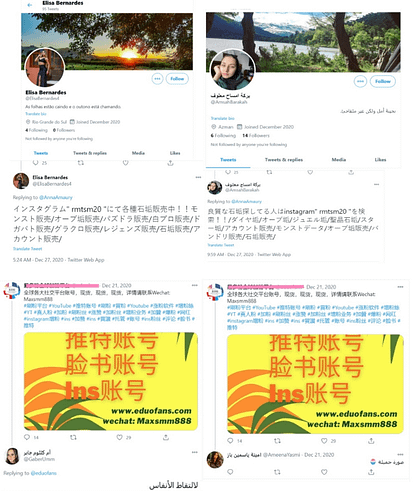

After the release of our investigation on the Indian Chronicles, the EU DisinfoLab was the target of a CIB campaign. The accounts involved were showing typical signs of inauthentic language behaviour.

In this instance, the users suspected of inauthentic language behaviour could be readily identified. Here are screenshots of some of these accounts. One can see accounts with a Spanish or Arabic bio answering in an Asian language written in a non-Latin alphabet, or accounts answering in Arabic to posts written in a non-Latin Asian script.

Figure 1: Screenshots of accounts with a Spanish or Arabic bio answering in an Asian language written in a non-Latin alphabet, or accounts answering in Arabic to posts written in a non-Latin Asian script

In its investigation Spamouflage Returns, Graphika reveals another case of inauthentic language behaviour: language shifting. The pages changed language and name at the same time, suggesting inauthenticity and repurposing.

“Throughout the first months of 2019, this posted uplifting English-language comments and images of scenery. As the initial wave of takedowns hit the network, it shifted to a characteristic blend of Chinese-language political posts”[9]

Changing the name of a page may indicate inauthentic behaviour but it is not the strongest criterion. A sudden change in language is a stronger indicator of inauthentic behaviour but also not sufficient. Changing both name and language at the same time mutually reinforces the two criteria.
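The ranking of these signals can be written down as a small rule. The sketch below walks through chronological snapshots of a page and labels each change as weak (name only), moderate (language only) or strong (both at once). The snapshot format, the labels and the sample page are assumptions made for the example; how the snapshots are collected is left out.

```python
def change_signals(snapshots):
    """Label name and language changes between consecutive snapshots.

    `snapshots` is a chronological list of dicts with the keys
    'date', 'name' and 'language' (a hypothetical format).
    """
    signals = []
    for previous, current in zip(snapshots, snapshots[1:]):
        name_changed = previous["name"] != current["name"]
        language_changed = previous["language"] != current["language"]
        if name_changed and language_changed:
            strength = "strong"      # both criteria reinforce each other
        elif language_changed:
            strength = "moderate"    # language shift alone
        elif name_changed:
            strength = "weak"        # name change alone
        else:
            continue
        signals.append((current["date"], strength))
    return signals


# Hypothetical history of one page.
history = [
    {"date": "2019-01-10", "name": "Beautiful Scenery", "language": "en"},
    {"date": "2019-03-02", "name": "Scenic Views", "language": "en"},
    {"date": "2019-08-20", "name": "Daily Politics", "language": "zh"},
]
signals = change_signals(history)
```

Even a "strong" label remains an indicator to be weighed with the other criteria, not a proof of inauthenticity.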

Of course, none of these behaviours is proof of fakery on its own. It is always possible that someone writes perfectly in Arabic, in Cyrillic and in Indonesian, and likes to comment in Arabic on promotional and commercial advertising written in Japanese. But such abilities are not common, and if they are seen in multiple connected accounts, there is a chance that this is a CIB network. Inauthentic language is a useful indicator because CIB networks are often re-used or involved in different campaigns.

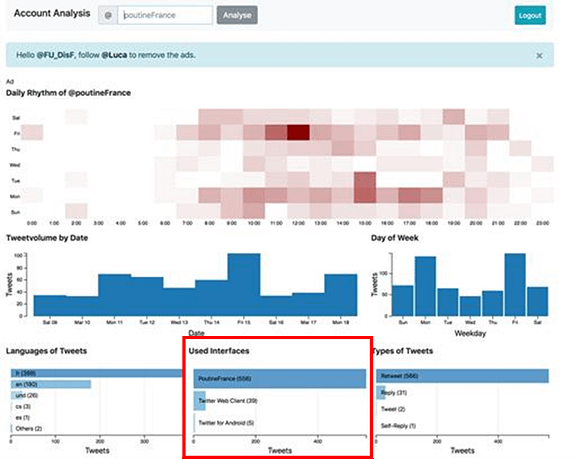

Research for Automated Syndication

The use of an automated tool for posting can be helpful to assess inauthenticity. This information appears in some tools, such as the “posting tools” of TruthNest[10] or the “used interface” of the Account Analysis[11] app.

In our investigation called “FandeTV”, which was carried out in partnership with Le Monde, the fact that multiple Twitter accounts used very specific IFTTT interfaces[12] (“Poutine France”, “J’ai Raison”, “RTPoissonMariton”) to automatically publish most of their tweets was among the first signs that pushed us to investigate this network for possible coordinated inauthentic behaviour. We used “Account Analysis” to detect these automated behaviours:

Figure 2: Screenshot from “Account Analysis” to help investigate automated posting behaviours

The use of a tool for automated posting is not always a sign of CIB. Most of the time, it is used by ordinary users to have their blogposts displayed automatically on social networks. This criterion can nevertheless become valuable if other signs of CIB have been detected.
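When post metadata has already been collected, the same kind of check can be sketched in a few lines: compute which posting interface each account uses, then list unusual interfaces shared by several accounts. The `source` field name, the list of common clients and the `min_share` threshold are assumptions for illustration and depend on how the data was exported.

```python
from collections import Counter, defaultdict

# Assumed list of everyday clients; anything else is treated as "unusual".
COMMON_CLIENTS = {"Twitter Web App", "Twitter for iPhone", "Twitter for Android"}


def client_shares(posts):
    """Return the share of posts published through each interface."""
    counts = Counter(post["source"] for post in posts)
    total = sum(counts.values())
    return {client: n / total for client, n in counts.items()}


def shared_unusual_clients(posts_by_account, min_share=0.5):
    """List unusual interfaces that at least two accounts rely on for
    `min_share` or more of their posts."""
    users = defaultdict(set)
    for account, posts in posts_by_account.items():
        for client, share in client_shares(posts).items():
            if client not in COMMON_CLIENTS and share >= min_share:
                users[client].add(account)
    return {client: sorted(accounts) for client, accounts in users.items()
            if len(accounts) > 1}


# Hypothetical sample: two accounts posting mostly through the same custom interface.
sample = {
    "account_a": [{"source": "J'ai Raison"}] * 3 + [{"source": "Twitter Web App"}],
    "account_b": [{"source": "J'ai Raison"}] * 2,
    "account_c": [{"source": "Twitter for iPhone"}] * 4,
}
shared = shared_unusual_clients(sample)
```

As noted above, a shared interface only matters alongside other signs of CIB, since scheduling tools are widely used by ordinary accounts.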

Dissimulation Assessment

Inauthentic Profile Picture Behaviour

In the first branch of the CIB detection tree, we suggested looking at changes made to profile pictures to detect abnormal behaviours such as simultaneous updates of profile pictures across multiple accounts. CIB networks commonly repurpose accounts, so several accounts may change their profile picture at the same time. Profile picture behaviour may also reveal coordination through the common origin of fake pictures. CIB networks tend to use the same pattern. Profile pictures may have been uploaded the same day, originate from the same pool or have been changed at the same time. These are indicators of coordination and are useful to the coordination assessment.

But profile picture behaviour is also a good indicator of inauthenticity for a single account. CIB is not always based on fake accounts, and an account with a fake picture is not always part of a CIB network, but it is safe to assume that most CIB campaigns will involve fake accounts, and a common feature of these is the use of a fake picture. It is also common for operators to use easy techniques and not to be too subtle in their profile picture behaviour. Most of the time, CIB campaigns are designed for quantity, not quality.

Things to look for when assessing profile pictures:

- similar look of the profile pictures (positioning, themes, expression and smile, etc.)

- similar landscape background (and whether it is blurred or not)

- eyeball positions

- differences between the ears

Differences between the ears and eyeball positions can give away GAN-generated pictures. In its investigation “Spamouflage Goes to America”[13], Graphika superimposed all the profile pictures of the accounts involved in the network to show how the eyeball positions were the same.

Figure 3: superimposition of Profile pictures of YouTube accounts commenting a video identified as material from the network
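The superimposition idea can be approximated numerically once eye positions are known. The sketch below assumes that eye coordinates have already been extracted from each picture (for example with a face landmark detector, not shown here) and normalised to the 0-1 range. It then measures how much those positions vary across a set of profile pictures. The `tolerance` value and the minimum of three pictures are arbitrary assumptions.

```python
from statistics import pstdev


def eye_position_spread(eye_coords):
    """Return the largest standard deviation among the four coordinates.

    `eye_coords` is a list of (left_x, left_y, right_x, right_y) tuples,
    each value normalised by the picture's width or height.
    """
    columns = list(zip(*eye_coords))
    return max(pstdev(column) for column in columns)


def looks_aligned(eye_coords, tolerance=0.01):
    """True when at least three pictures share near-identical eye positions."""
    return len(eye_coords) >= 3 and eye_position_spread(eye_coords) <= tolerance


# Hypothetical coordinates: the first set is suspiciously uniform, the second is not.
uniform = [(0.380, 0.480, 0.620, 0.480),
           (0.381, 0.479, 0.619, 0.481),
           (0.379, 0.481, 0.621, 0.480)]
varied = [(0.30, 0.40, 0.55, 0.42),
          (0.45, 0.55, 0.70, 0.50),
          (0.38, 0.48, 0.62, 0.48)]
```

Here `looks_aligned(uniform)` holds while `looks_aligned(varied)` does not. Tightly cropped passport-style photos can also line up, so a visual check remains necessary.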

It is also useful to run a reverse image search on profile pictures and cover pictures to check whether the images were stolen from legitimate accounts or extracted from an online image database.

It can also happen that something in the picture gives away its origin. In the CIB campaign against the EU DisinfoLab, for instance, one could find the original logos on the profile pictures used by the network. In this network, we were also able to find a pattern in the profile and/or cover picture behaviour. Cover pictures were taken from the internet and then cropped. They could be traced back using reverse image search.

Figure 4: Finding profile cover pictures using reverse image search tools

Profile pictures were also taken from the web. Searching the web for the name used by one of the accounts led us to the Instagram account from which its profile picture was taken. The Instagram account had nothing to do with the inauthentic Twitter account used in the campaign against the EU DisinfoLab.

If a fake picture is detected, it is useful to check if the profile also uses a fake name and vice versa. Once a pattern of inauthenticity is detected, it is useful to check if the other suspected accounts also use the same pattern.

Finding the account creation pattern will prove to be a great asset for proving inauthenticity.[14] In the EU DisinfoLab report on the Indian Chronicles, we discovered a specific pattern was used in the names of the network for dissimulation. The Indian Chronicles network was using the names of dead people, dead organisations, or former interns. We then investigated the assets of the network following this pattern:

- When we found the name of an organisation, we checked whether it was a defunct organisation.

- When we found a potential fake name, we looked for existing occurrences (which could be the name of a deceased person or a former staff member). We also looked for small changes in the spelling of the name.

Following this pattern proved to be successful most of the time (see the Naming Conventions criterion below).
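Looking for small changes in the spelling of a name can be partly automated with fuzzy string matching. The sketch below uses `difflib.SequenceMatcher` from Python's standard library to compare a suspect name against a reference list that the investigator has compiled. The names and the 0.85 threshold are invented for the example and do not come from the Indian Chronicles report.

```python
import unicodedata
from difflib import SequenceMatcher


def normalise(name):
    """Lower-case a name, strip accents and collapse whitespace."""
    decomposed = unicodedata.normalize("NFKD", name)
    without_accents = "".join(ch for ch in decomposed
                              if not unicodedata.combining(ch))
    return " ".join(without_accents.lower().split())


def near_matches(candidate, known_names, threshold=0.85):
    """Return the known names whose spelling is close to `candidate`,
    sorted by decreasing similarity."""
    target = normalise(candidate)
    hits = []
    for known in known_names:
        ratio = SequenceMatcher(None, target, normalise(known)).ratio()
        if ratio >= threshold:
            hits.append((known, round(ratio, 2)))
    return sorted(hits, key=lambda hit: hit[1], reverse=True)


# Hypothetical reference list of real people and a suspect byline.
reference = ["Jean Dupont", "Maria Rossi"]
matches = near_matches("Jean Dupond", reference)
```

A close match only tells the investigator where to look; confirming that a name was borrowed from a real person still requires manual verification.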

Similar Profile Picture, Cover Picture or logo

Checking the similarity of logos is useful to establish coordination within a network or to discover duplicated accounts. In a leaked Facebook report published by Buzzfeed[15], we learned that Facebook identified the coordination and internal hierarchy of the Patriot Party network by investigating its similar logos.

“Not all movements will have common branding, but when they do, it is a clear sign of coordination. PP [Patriot Party] Groups and Pages used the same or similar logos to identify official sources.”

Checking for identical or similar profile pictures is also useful to find duplicated accounts. The Avaaz study on Far Right Networks of Deception found that one technique used by far-right networks to amplify content was to set up duplicate accounts of the same people. This is a violation of some platform policies. Facebook, for instance, forbids the use of multiple accounts.

Twitter allows multiple accounts but bans their use under certain conditions, such as “operating multiple accounts with overlapping use cases, such as identical or similar personas or substantially similar content; operating multiple accounts that interact with one another in order to inflate or manipulate the prominence of specific Tweets”.

The Avaaz study found that duplicate or overlapping accounts sometimes shared or used the same picture[16]. Moreover, overlapping and duplicated accounts or pages would sometimes use the same logo while displaying different names.

Figure 5: Screenshot from Avaaz study on Far-Right Networks of Deception showing multiple pages using the same logo and sharing the same content
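One common way to compare logos at scale is a perceptual hash. The sketch below implements a simple "average hash" in pure Python. It assumes each logo has already been converted to a small grayscale grid (for example 8x8) by an image library, a step not shown here. The `max_distance` threshold and the toy grids are assumptions; this is not the method used by Facebook or Avaaz.

```python
def average_hash(gray):
    """Return one bit per pixel: 1 if the pixel is brighter than the mean.

    `gray` is a list of rows of grayscale values, already downscaled.
    """
    pixels = [value for row in gray for value in row]
    mean = sum(pixels) / len(pixels)
    return [1 if value > mean else 0 for value in pixels]


def hamming(bits_a, bits_b):
    """Count the positions at which two equal-length hashes differ."""
    if len(bits_a) != len(bits_b):
        raise ValueError("hashes must have the same length")
    return sum(a != b for a, b in zip(bits_a, bits_b))


def similar(gray_a, gray_b, max_distance=5):
    """True when the two images differ in at most `max_distance` hash bits."""
    return hamming(average_hash(gray_a), average_hash(gray_b)) <= max_distance


# Toy 8x8 "logos": a dark/bright split, a slightly brightened copy, and its negative.
logo = [[10] * 4 + [200] * 4 for _ in range(8)]
brighter_copy = [[value + 20 for value in row] for row in logo]
negative = [[255 - value for value in row] for row in logo]
```

With these toy grids, `similar(logo, brighter_copy)` holds and `similar(logo, negative)` does not. Real logos with added text or borders will need a looser threshold and a manual look.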

Inauthentic name behaviour

Changing the name of an account or page can be an indicator of repurposing, especially if the asset switches between completely different subjects.

This technique is called “bait and switch” by Avaaz. They describe it and give examples in their report Far Right Networks of Deception:

Bait and switch: An example is when networks create pages with names that cover nearly the entire spectrum of popular interest: football, beauty, health, cooking and jokes. Once the audience is built, the page admins appear to deliberately start boosting political or divisive agendas, often in a coordinated way, on pages that, at face value, should be completely unrelated to politics.[17]

Name Change

This technique is called “Recycling followers” by Avaaz (alongside “Bait and Switch”). “Deceptive name changes are a key tactic used by many pages. For example, pages that have originally started as lifestyle groups, music communities or local associations are being turned into far-right or disinformation pages, “recycling followers” and serving them content completely different than what they had initially signed up for”.

Graphika, in its report “Spamouflage Goes to America”[18], used Vidooly to track name changes. Graphika showed that the account displayed the same profile picture but a different name on YouTube and on Vidooly. This indicated that the account had changed its name:

“Typically for the network, most of the videos were not viewed at all, or very seldom. However, one post from an account called 风华依旧 recorded over 14,000 views, but no comments. The account was created in 2016 and apparently received over 360,000 views in total, but when Graphika accessed it, it only showed five videos with a total of some 26,000 views. According to online tool vidooly.com, the account (listed by its user ID) was originally called “Karolyn Tindal.” These factors suggest that the account was repurposed from a prior user; the viewing figures should be regarded with skepticism.”

Graphika then manually examined the account’s posting behaviour. First, they determined the periods of posting, separated by short or long periods of silence. Then Graphika analysed:

- the content of the video posted,

- their duration,

- the frequency of posts,

- the metrics of the video.

These criteria are detailed in the three other branches of the tree.
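The first step described above, splitting an account's activity into posting periods separated by silence, can be sketched as follows. The 30-day `max_gap` and the sample dates are assumptions chosen for illustration; Graphika did this analysis manually and the report does not specify a threshold.

```python
from datetime import datetime, timedelta


def posting_periods(timestamps, max_gap=timedelta(days=30)):
    """Group timestamps into periods of activity.

    A new period starts whenever the silence since the previous post
    exceeds `max_gap`. Returns (first_post, last_post, post_count) tuples.
    """
    periods = []
    for ts in sorted(timestamps):
        if periods and ts - periods[-1][-1] <= max_gap:
            periods[-1].append(ts)
        else:
            periods.append([ts])
    return [(period[0], period[-1], len(period)) for period in periods]


# Hypothetical upload dates: early activity, a long silence, then a new burst.
uploads = [
    datetime(2019, 1, 5), datetime(2019, 1, 20), datetime(2019, 2, 10),
    datetime(2019, 9, 1), datetime(2019, 9, 3),
]
periods = posting_periods(uploads)
```

Once the periods are separated, the content, duration, frequency and metrics of the posts in each period can be compared to see whether the account changed character after a silence.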

Naming Conventions

In its study Reply All: Inauthenticity and Coordinated Replying in Pro-Chinese Communist Party Twitter Networks, ISD looked at the naming conventions of the accounts of its network.[19]

“Some accounts are made up of a generic name followed by a sequence of numbers, whereas others are an alphanumeric combination, and others obeyed patterns, where specific characters remain consistent and there are only changes in the sequence of numbers. These accounts are also usually clustered by similar blocks of creation dates.”

Figure 6: Screenshot of ISD study showing the naming conventions of the CIB network
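The two observations quoted above, handles built from a generic name plus a run of digits and accounts created in blocks, lend themselves to a simple first-pass filter. The regular expression, the three-digit minimum, the grouping by month and the sample handles below are assumptions for illustration; they are not ISD's actual method.

```python
import re
from collections import defaultdict
from datetime import date

# A letters-only stem followed by at least three digits, e.g. "maria83920174".
HANDLE_PATTERN = re.compile(r"^([A-Za-z_]+)(\d{3,})$")


def group_by_stem(handles):
    """Group handles that share the same stem before a numeric suffix."""
    groups = defaultdict(list)
    for handle in handles:
        match = HANDLE_PATTERN.match(handle)
        if match:
            groups[match.group(1).lower()].append(handle)
    return {stem: found for stem, found in groups.items() if len(found) > 1}


def creation_blocks(accounts, min_size=2):
    """Group (handle, creation_date) pairs by creation month and keep
    the months in which at least `min_size` accounts were created."""
    by_month = defaultdict(list)
    for handle, created in accounts:
        by_month[created.strftime("%Y-%m")].append(handle)
    return {month: found for month, found in by_month.items()
            if len(found) >= min_size}


# Hypothetical accounts.
accounts = [
    ("maria83920174", date(2020, 3, 2)),
    ("maria83920188", date(2020, 3, 2)),
    ("Maria10293847", date(2020, 3, 4)),
    ("real_person", date(2012, 6, 1)),
]
stems = group_by_stem([handle for handle, _ in accounts])
blocks = creation_blocks(accounts)
```

Platforms themselves suggest handles with numeric suffixes to new users, so a shared stem is most informative when it coincides with a shared creation block.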

Together with checking for name changes, it is useful to check whether pages have merged and whether posts were deleted at the time of the name change. Repurposed accounts tend to delete their previous posts when changing names. In the EU DisinfoLab investigation on La Verdadera Izquierda, we discovered that the repurposed accounts had been cleaned of all content before being sold and renamed[20].

Monitor Known Inauthentic Suspects

Specific online spaces (marketplaces, forums, etc.) are known as places where Coordinated Inauthentic Behaviour campaigns are organised. It is always worth looking at these spaces, whether to follow an existing lead or simply as part of a regular watch.

There are two ways to check for known inauthentic suspects, either by going after inauthentic accounts or by going after the coordination of accounts.

Check known websites of fake accounts resellers

After our investigation on La Verdadera Izquierda, the Spanish newspaper El Diario ran its own investigation. They discovered that the accounts we had identified as CIB were also being resold on the black market.

The El Diario article titled “Social network accounts sold for political propaganda, the reason here”[21] investigates how Facebook, Twitter, and Instagram accounts, previously created, and actively used to gain followers, are sold in some internet forums, such as Milanuncios or ForoBeta, as well as eBay for video game accounts. This black market openly goes against the social media platforms’ terms of service, which prohibit the purchase and sale of accounts. The buyers do not usually know the origin, background, history, and methods used to build an audience for these social media accounts. Most of the time, it is possible to find traces connected to the account’s original owner.

Figure 7: Screenshot from El Diario article showing the selling of an account

These are the places where inauthentic accounts are bought and sold, so they are likely to be full of inauthentic accounts.

Check CIB campaign organisers

The other places to look for CIB are places where CIB campaigns are devised or organised (online forums, for instance). We already spoke about looking for specific keywords for tracking CIB in our first blogpost[22], the Coordination Assessment, sub-section “Identifying Keywords Used to Rally”. We mentioned that looking for the keyword “Raid” in the infamous French Forum 18-25 would unveil discussions of harassment campaigns against a specific target.
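When forum posts have already been collected, a keyword watch of this kind can be sketched as a per-day count of posts mentioning a rallying keyword. The post format (`date`, `text`), the sample messages and the whole-word matching rule are assumptions for illustration. Collecting the posts is not shown and must respect each forum's terms of use.

```python
import re
from collections import Counter


def keyword_timeline(posts, keywords):
    """Count, per day, the posts that mention any keyword as a whole word.

    `posts` is a list of dicts with 'date' (ISO string) and 'text' keys.
    """
    if not keywords:
        return {}
    alternatives = "|".join(re.escape(keyword) for keyword in keywords)
    pattern = re.compile(r"\b(?:" + alternatives + r")\b", re.IGNORECASE)
    counts = Counter()
    for post in posts:
        if pattern.search(post["text"]):
            counts[post["date"]] += 1
    return dict(sorted(counts.items()))


# Hypothetical forum posts.
posts = [
    {"date": "2021-03-01", "text": "RAID ce soir sur son compte"},
    {"date": "2021-03-01", "text": "rien à voir avec le sujet"},
    {"date": "2021-03-02", "text": "on lance un raid ?"},
    {"date": "2021-03-02", "text": "Raid à 21h"},
    {"date": "2021-03-03", "text": "braided hair tutorial"},
]
timeline = keyword_timeline(posts, ["raid"])
```

A sudden rise in matching posts points to threads worth reading manually; the count alone says nothing about intent.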

It is also worth checking other places or communities known for organising platform manipulation, CIB or harassment campaigns. The open-source tool SMAT (Social Media Analysis Toolkit) was “designed to help facilitate activists, journalists, researchers, and other social good organizations to analyze and visualize larger trends on a variety of platforms”.[23]

This tool could be an asset for this specific criterion, as SMAT allows one to investigate platforms that are known to be used for coordinated campaigns. In particular, SMAT can perform searches on 8kun, Gab, Parler and 4chan.

Figure 8: Screenshot of the SMAT tool running a search for the keyword “QAnon” on Gab, Parler and 8kun

Any tool that can be used to monitor these platforms will be useful for this specific criterion and may help identify the organisation of CIB campaigns.

Conclusion

This is the last branch of the CIB detection tree[24]. In the other branches, we explored other criteria that can be used to assess coordination, for instance tracking the source of a CIB campaign, researching similar narratives or checking posting behaviours.

This tree was elaborated through careful study of the methodology used by non-platform actors to track CIB, and of the takedown reports and anti-CIB policies of the platforms. As with any tool involving assessments, OSINT techniques or open-source investigation methodologies, entering the world of assessing CIB is entering a world of false positives, false leads and second-guessing. This tree is designed to assist researchers and non-platform actors in their investigations. It was designed with automation in mind: some of the tasks described here could be automated to assist human investigations. However, it was also designed bearing in mind that humans do the hard work and that only humans can eliminate false positives and false leads. We believe that, even with all four branches complete, the CIB detection tree can still be improved, and we welcome feedback, comments, and additions from the community. We also believe it will help researchers to populate their reports with language and examples that the platforms can understand. Because platforms have committed themselves to removing and acting against CIB, this tree can be used both as a detection tool and as a tool for proving CIB in a particular network already under scrutiny.

[1] https://about.fb.com/news/2018/12/inside-feed-coordinated-inauthentic-behavior/

[2] https://slate.com/technology/2020/07/coordinated-inauthentic-behavior-facebook-twitter.html

[3] https://www.disinfo.eu/publications/cib-detection-tree1/

[4] https://www.disinfo.eu/publications/cib-detection-tree2/

[5] https://www.disinfo.eu/publications/cib-detection-tree-third-branch/

[6] https://about.fb.com/wp-content/uploads/2021/05/IO-Threat-Report-May-20-2021.pdf

[7] https://blog.twitter.com/en_au/topics/company/2019/Spamm-ybehaviour-and-platform-manipulation-on-Twitter#:~:text=The%20Twitter%20Rules%20state%20that,similar%20content%20across%20multiple%20accounts

[8] https://www.disinfo.eu/publications/cib-detection-tree-third-branch/

[9] https://public-assets.graphika.com/reports/Graphika_Report_Spamouflage_Returns.pdf p.5

[10] https://www.truthnest.com/

[11] https://accountanalysis.app/

[12] IFTTT (“If This Then That”)

[13] https://public-assets.graphika.com/reports/graphika_report_spamouflage_goes_to_america.pdf

[14] https://www.disinfo.eu/publications/indian-chronicles-deep-dive-into-a-15-year-operation-targeting-the-eu-and-un-to-serve-indian-interests/

[15] https://www.buzzfeednews.com/article/ryanmac/full-facebook-stop-the-steal-internal-report

[16] https://avaazimages.avaaz.org/Avaaz%20Report%20Network%20Deception%2020190522.pdf p.15 – 16

[17] https://avaazimages.avaaz.org/Avaaz%20Report%20Network%20Deception%2020190522.pdf p.12

[18] https://public-assets.graphika.com/reports/graphika_report_spamouflage_goes_to_america.pdf

[19] https://www.isdglobal.org/wp-content/uploads/2020/08/ISDG-proCCP.pdf

[20] https://www.disinfo.eu/publications/la-verdadera-izquierda-and-the-sale-of-accounts-on-the-black-market/

[21] https://www.eldiario.es/tecnologia/compraventa-cuentas-twitter-facebook_0_1036596712.html

[22] https://www.disinfo.eu/publications/cib-detection-tree1/

[23] https://www.smat-app.com/timeline

[24] https://www.disinfo.eu/publications/cib-detection-tree1/