This blog post is part of a series on COVID-19. Please have a look at our dedicated hub and subscribe to our mailing list for updates.

Printable PDF version here (dated 03/04)

- update on 29/09 to include Facebook policy on groups, and enforcement updates from YouTube and Twitter

- update on 13/05 to include Twitter policy update

- update on 05/05 to include LinkedIn, an update on Twitter, and enforcement from Facebook

- update on 27/04 to include updates from Facebook, Google, Twitter, and YouTube

- update on 23/04 to include updates on enforcement from Facebook and Twitter

- update on 17/04 to include updates from Facebook and TikTok

- update on 14/04 to include analysis of Facebook and YouTube policy enforcement on 5G and Coronavirus conspiracies

- update on 09/04 to include TikTok and WeChat

- update on 07/04 to include WhatsApp, Google and YouTube’s policy updates.

- update on 06/04 to include Telegram’s policy updates.

- update on 01/04 to include Twitter’s policy updates.

EU DisinfoLab has collaborated on a UNESCO/ITU Broadband Commission study analysing global responses to disinformation in the context of freedom of expression and access to information challenges. Read the report here.

We analysed the blog posts, statements, and policy updates from the platforms to compile a comprehensive overview of the measures undertaken. As the situation is evolving quickly, we will update this page regularly. If you would like to flag any updates or inconsistencies, please feel free to reach out to info@disinfo.eu.

In the context of the COVID-19 crisis, misinformation about the epidemic has been flooding the internet. The online platforms have been quick to react and have demonstrated their ability to take action to limit the spread of such content. Major tech companies even published a joint industry statement, endorsed by Facebook, Google, LinkedIn, Microsoft, Reddit, Twitter, and YouTube, in a move to jointly combat fraud and misinformation on their services.

Overall, it is remarkable how closely tech companies have been working with public health authorities and governments to face the crisis. Such a role also comes with immense responsibility over public discourse, which should be reflected in any future regulation.

From our analysis, we identified five trends in the platforms’ policies on disinformation about Covid-19, which are detailed further in the platform-by-platform descriptions below.

Surfacing and prioritizing content from authoritative sources

Most of the platforms we analysed are redirecting users to authoritative sources of content. Searches on Covid-19 surface content from public health authorities, and dedicated information sections and banners are used to highlight information from health agencies. In addition, the World Health Organisation partnered with several services to create dedicated channels, for example on WhatsApp and Snapchat.

Closer cooperation with fact-checkers and health authorities to flag and proactively remove disinformation

In addition to their partnerships with fact-checkers, the platforms rely on public health authorities to flag disinformation related to the epidemic. Based on this information, the companies committed to proactively removing content that promotes false cures or denies public health authorities’ recommendations and proven scientific facts.

Increased use of automated content moderation

Social distancing measures to prevent the spread of the virus have forced thousands of moderators to work from home. In response, platforms chose to increase their use of AI filters to moderate content, which has led to a large number of bugs and false positives.

Offering free advertising to authorities

The World Health Organisation and national health authorities have been granted free advertising credit by Facebook and Twitter, and have been offered help by Google to run ads.

Dealing with scams

The platforms also had to ban advertising that tried to profit from the crisis. Nonetheless, many scams got past the filters, leading law enforcement and consumer authorities to warn consumers and call on marketplaces to react.

Information available on 25/03

Facebook and Instagram

Facebook’s policies on coronavirus misinformation can be found here and here.

Promoting reliable information

Searches for information and hashtags related to COVID-19 on Facebook and Instagram surface educational pop-ups and redirect users to information from the World Health Organisation and local health authorities. The WHO has also been granted free advertising on the platforms, and some partner organisations have been given extra advertising credits.

In March 2020, Facebook announced the creation of an information center called “Facts about Covid-19”, a panel providing authoritative information and debunks of the most common misinformation about Covid-19. The content is provided by the WHO.

Preventing misinformation

In this context, the company is implementing its existing policies. Facebook relies on the work of third-party fact-checkers to debunk false claims related to COVID-19, limiting the spread of content verified as false. People trying to share an article verified as false are also notified it has been fact-checked.

In addition, false claims flagged by public health authorities are removed. This includes “claims related to false cures or prevention methods — like drinking bleach cures the coronavirus — or claims that create confusion about health resources that are available”. Also, the company committed to removing hashtags used to spread disinformation on Instagram. The company has also banned ads that imply a product guarantees a cure or prevents people from contracting COVID-19.

Meanwhile, given the urgency of the situation and with staff working from home, Facebook stepped up moderation and relied heavily on automated filters. As the company warned in a blog post, this inevitably led to more errors. As anticipated, content from authoritative sources such as the BBC was wrongly removed or blocked from being uploaded.

Since 16/04, Facebook retroactively informs users if they have engaged with COVID-19 mis/disinformation on the platform.

Facebook has removed its “pseudoscience” category from the list of categories advertisers can use to target people, following an investigation by The Markup.

On 17/09, Facebook announced new measures to prevent misinformation in Facebook groups. These include preventing people who have already committed policy violations from creating new groups. Health groups have also been removed from algorithmic recommendations. Finally, Facebook announced it will apply its “remove, reduce, inform” policy to Groups, meaning that Groups could be removed or have their reach reduced if they repeatedly share misinformation.

On 13/10, Facebook announced a new policy aimed at vaccine falsehoods, including a ban on ads that discourage vaccination. The company will work with global health partners to increase immunization and encourage flu shots. Notably, however, this policy does not apply to groups.

Enforcement

On 30/03, Facebook removed from its main social network and from Instagram a video of Brazilian President Jair Bolsonaro endorsing hydroxychloroquine and calling for an end to social-distancing efforts. According to the company, the video violated its community standards banning disinformation that could lead to “physical harms”.

In the UK, Facebook removed two anti-5G groups on 13/04 after members encouraged the destruction of mobile phone masts, in breach of the network’s policies on promoting violence. Meanwhile, groups sharing videos that YouTube had removed for promoting conspiracy theories about 5G and the coronavirus remained active. Although some videos have been removed, we observed that content moderation has been inconsistent across platforms and functions. Banned content was still being shared on Facebook, with differences between the mobile and desktop versions, and it could still be shared from one platform to another.

On 20/04, Facebook announced it had removed events in several US states promoting protests against stay-at-home measures during the COVID-19 pandemic. The company said it would only take down anti-quarantine protest events if they defied government guidelines.

On 01/05, Facebook removed the page of conspiracy theorist David Icke for violating the company’s policies on harmful misinformation. The Guardian noted that, as of 01/05, a secondary account with more than 68,000 followers remained active. His verified account on Twitter also remained active.

WhatsApp

With families, colleagues and relatives increasingly communicating digitally, WhatsApp has become one of the main channels through which conspiracy theories, unverified advice and disinformation about the epidemic go viral. The platform has set up a “WhatsApp Coronavirus Information Hub” in partnership with WHO, UNICEF, and UNDP, and suggests ways to use the platform to help communities during the crisis. In addition, the messaging platform worked with the World Health Organisation to open a dedicated channel with a bot that automatically answers questions and provides reliable information to users. Meanwhile, Facebook has announced a $1 million grant to support fact-checkers (see the section on fact-checking responses). According to a WhatsApp spokesperson, the company is currently working on AI to remove spam accounts. WhatsApp has also announced that it will further limit message forwarding in an effort to combat the spread of misinformation on the app.


Google and YouTube

See Google’s COVID-19 policies here.

Promoting reliable information

Google partnered with the US government to develop a website dedicated to Covid-19 education and information. Google’s homepage also promotes measures to limit the spread of the virus, such as washing hands. Google Search also includes an “SOS Alert” promoting information from official sources on the symptoms and prevention of the disease.

On 06/04, Google announced the rollout of a COVID-19 hub in Google News. The hub organises news from authoritative sources by region and by topic (economy, health, travel, etc.).

On YouTube, videos from public health agencies appear on the homepage. The video-sharing platform also highlights content from authoritative sources when people search for information on COVID-19, and displays information panels that add context.

On 14/10, YouTube announced the expansion of its medical misinformation policy to include Covid-19 vaccine information that contradicts health authorities. See YouTube’s COVID-19 Medical Misinformation Policy Hub here.

Preventing misinformation

Google is actively removing disinformation from its services, including YouTube and Google Maps. On YouTube, it removes videos that promote medically unproven cures. It also blocks ads that try to capitalize on the situation. Recently, YouTube announced it will remove videos that groundlessly link 5G to COVID-19. On 22/04, YouTube announced it had banned “medically unsubstantiated” videos from its platform.

Enforcement

Following a live-streamed interview with conspiracy theorist David Icke on 06/04, in which he linked 5G technology to the pandemic, YouTube announced it would ban conspiracy theory videos falsely linking coronavirus symptoms to 5G networks. We traced the diffusion of this video and how moderation was enforced. It was indeed removed from YouTube, but we observed that content moderation has been inconsistent across platforms and functions, both between the mobile and desktop versions and when content is shared from one platform to another.

A study from the Oxford Internet Institute found that it took an average of 41 days for a Covid-19 misinformation video to be removed from the platform.

Twitter

See Twitter’s strategy against COVID-19 misinformation here.

Promoting reliable information

Twitter has set up a Covid-19 event page, displayed at the top of the timeline, with the latest updates from trusted sources. The company has also worked on verifying accounts with email addresses from health and public institutions so that they can provide reliable information on the topic.

The platform announced it is working with UNESCO on a joint campaign to help users critically assess the credibility of the information they come across and share on the platform.

Preventing misinformation

Like Facebook, Twitter anticipated an increase in automated filtering to prevent the massive spread of Covid-19 related misinformation on its platform, acknowledging that this could lead to errors.

In addition, the company broadened its definition of harm on the platform to include: denial of public health authorities’ recommendations; descriptions of treatments known to be ineffective; denial of scientific facts about the transmission of the virus; claims about Covid-19 intended to manipulate people, and related conspiracy theories; incitement to actions that could cause widespread panic; and claims that specific groups are more susceptible, or never susceptible, to Covid-19.

Based on its Inappropriate Content Policy, Twitter aims to halt attempts by advertisers to opportunistically use the COVID-19 outbreak to target inappropriate ads, for example by prohibiting ads for medical masks.

On 23/04, Twitter announced it will delete “unverified claims” on 5G and Coronavirus that could lead directly to the destruction of critical infrastructure or cause widespread panic.

On 24/04, Twitter announced that it would remove tweets or trends about #COVID19 that include a call to action that could potentially cause someone harm. For example, the Trends #InjectDisinfectant and #InjectingDisinfectant were subsequently blocked.

On 29/04, Twitter announced the launch of a free COVID-19 stream endpoint for qualified developers and researchers to study the public conversation in real time.

On 11/05, Twitter introduced a new policy to label tweets that contain COVID-19-related misinformation. The labels link to a page curated by Twitter or to an “external trusted source” that provides information about the claims made in the tweet.

Enforcement

Enforcing its policy, Twitter has removed tweets by the presidents of Brazil and Venezuela and former New York City Mayor Rudy Giuliani for violating its ban on content that “goes directly against guidance from authoritative sources of global and local public health information.”

On 22/04, Twitter stated: “Since introducing our updated policies on March 18, we have removed more than 2,200 Tweets containing misleading and potentially harmful content from Twitter. Additionally, our automated systems have challenged more than 3.4 million accounts which were targeting discussions around COVID-19 with spammy or manipulative behaviors.”

On 28/07, Twitter asked Donald Trump Jr. to remove a tweet containing Covid-19 disinformation.

Snapchat

Snapchat has used its “Discover” function to highlight information from partners. This section of the platform provides vetted content from editors and advertisers. The WHO partnered with the platform to create filters and stickers that promote tips and guidance on how to prevent the spread of the virus. In addition, the organisation has a dedicated official account to provide updates and information to users. To help teenagers cope with social distancing, Snap has rolled out a dedicated mental health feature.

Reddit

Reddit has positioned a dedicated subreddit as a reliable source of information, with posts verified by moderators and contributions from renowned scientists.

Telegram

On 03/04, the company announced a new process to verify channels from official government sources so that they can broadcast information to a large audience. The platform said it sent notifications to users in countries offering such official channels. In addition, searches for “Coronavirus” now redirect to a dedicated channel containing a list of news sources by country.

On a lighter note, Telegram now offers mask stickers to add to pictures.

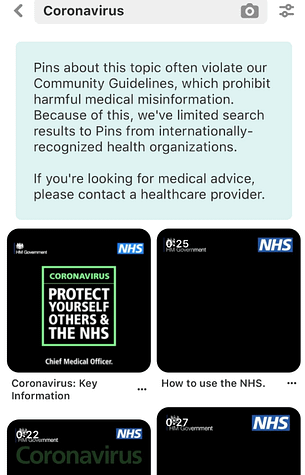

Pinterest

Pinterest has a very strict health misinformation policy, initially designed for anti-vaxx content. Its community guidelines strictly ban health misinformation from the platform. For this reason, every search for “Coronavirus” on the platform leads to a dedicated page with information from the World Health Organisation. In addition, Pinterest rolled out a new tab, called the “Today tab”, to show users curated and trending topics. It currently features information related to Covid-19, in partnership with the World Health Organisation. The company is also blocking ads that try to profit from the crisis, yet several scams were found on the platform.

TikTok

When users search for Coronavirus on TikTok, they are presented with a WHO information banner. The World Health Organization has joined the platform to provide informational videos to users. TikTok has also made changes to its policy on direct messaging.

WeChat

WeChat has taken a completely different approach to the crisis. It removes most coronavirus-related content, regardless of the veracity of the information shared, through automated filtering based on combinations of keywords. This policy has been criticized by human rights organisations.

LinkedIn

LinkedIn has set up a dedicated information page, “Coronavirus official updates and sources”, curated by LinkedIn editors.