by Maria Giovanna Sessa

This blogpost is part of the EU DisinfoLab Covid19 hub, an online resource designed to provide research and analysis from the community working on disinformation. These resources highlight how the COVID-19 crisis has impacted the spread of disinformation and policy responses.

Edited on 28/10 to update Facebook link to HCQ policy. The original link was pointing there.

- Our research shows loopholes in the enforcement of Facebook policies, indicating that enforcement is inconsistent. In particular, the labelling of fact-checked disinformation on hydroxychloroquine (HCQ) can easily be circumvented, allowing similar stories to circulate without any label or context.

- We also show that disinformation is by nature a cross-platform issue, with content hosted on other online repositories (websites, YouTube videos, quoted tweets, etc.). This emphasises the need to move away from a platform-per-platform approach and instead address disinformation as a systemic issue. Very few steps have been taken in that direction.

- In specific cases of data voids or insufficient authoritative content, such as the initial claims about the benefits of HCQ in preventing COVID-19, online platforms are even more dependent than usual on authoritative content. To improve the response to future crises, potential issues or threats where strong authoritative references will be needed should be mapped in advance.

- As we concluded in the new ITU/UNESCO study on disinformation, co-authored by the EU DisinfoLab, Facebook, and large platforms in general, must avoid granting exceptions to certain kinds of users and content ("too big to moderate"). Their content moderation and curatorial responses must become transparent and measurable, protect human rights, and be implemented equitably on a global scale. The platforms have the potential to do this, but currently this is not the case.

Once again, our research remains heavily constrained by a lack of meaningful data with which to check the accountability of the platforms. More transparency is needed on the labelling of online adverts (i.e., labelling ads as concerning social or political issues), on content flagged to third parties, on verifiable audience figures, and on the enforcement results of this policy.

In the past few months, Facebook has reiterated its commitment to containing the spread of unsubstantiated medical claims on how to prevent or cure COVID-19, a commitment it says applies to controversial or discredited claims regarding hydroxychloroquine (HCQ).

Since the policy was formulated in mid-July, EU DisinfoLab has collected numerous posts from various Facebook pages and publicly accessible groups, all of which endorse the alleged efficacy of HCQ. While some of this content appears to have been fact-checked or labelled, a significant number of posts were not. These showed no outward sign that any action had been taken to enforce the policy, even though they promoted false or misleading narratives within what appear to be highly polarising Facebook groups and pages, in recurrent violation of Facebook's own policies.

Moderation challenge

COVID-19 presented social media sites such as Facebook with probably the most formidable challenge that they have faced since their inception – that of moderating and fact-checking what the World Health Organisation (WHO) dubbed an “infodemic”. The latter was defined as “an over-abundance of information – some accurate and some not”.

An added challenge came from the dynamic nature of authoritative scientific knowledge, which has evolved dramatically since March 2020 as clinical trials have produced trustworthy medical evidence. Authoritative advice and government regulations have changed accordingly. Consequently, some information posted back in April 2020 may have been correct and in line with scientific understanding at the time, but can no longer be considered correct when evaluated against the latest WHO and other authoritative advice.

From the very early stages of the pandemic, hydroxychloroquine has been a particularly contested and polarising case. The WHO advises that: "Current data shows that this drug does not reduce deaths among hospitalised COVID-19 patients, nor help people with moderate disease." It also adds that: "More decisive research is needed to assess its value in patients with mild disease or as pre- or post-exposure prophylaxis in patients exposed to COVID-19."

Hydroxychloroquine posts on Facebook

On July 15th, Facebook updated its content moderation policy to cover scientifically debatable claims in support of the use of hydroxychloroquine against COVID-19:

“To further limit the spread of misinformation, this week we are launching a dedicated section of the COVID-19 Information Center called Facts about COVID-19. It will debunk common myths that have been identified by the World Health Organization such as drinking bleach will prevent the coronavirus or that taking hydroxychloroquine can prevent COVID-19. This is the latest step in our ongoing work to fight misinformation about the pandemic.”

In addition, Facebook intensified its commitment to sending suspected COVID-19 misinformation for verification by third-party fact-checkers and removing content making false claims about cures and prevention, as well as directing users who interacted with such posts towards authoritative information sources such as WHO COVID-19 guidance.

While the vast scale of Facebook's user base makes it impossible to monitor all content posted on the site, we analysed a random sample of HCQ-related posts published after July 15th. We focused exclusively on posts containing external links to articles or videos that had not been moderated or labelled and were still available at the time of writing (25 September 2020). The content originated from Facebook pages with a significant audience reach of at least 10,000 followers and from public Facebook groups with at least 1,000 members. In addition, we focused specifically on Facebook accounts and groups that posted about HCQ regularly rather than as a one-off occurrence.

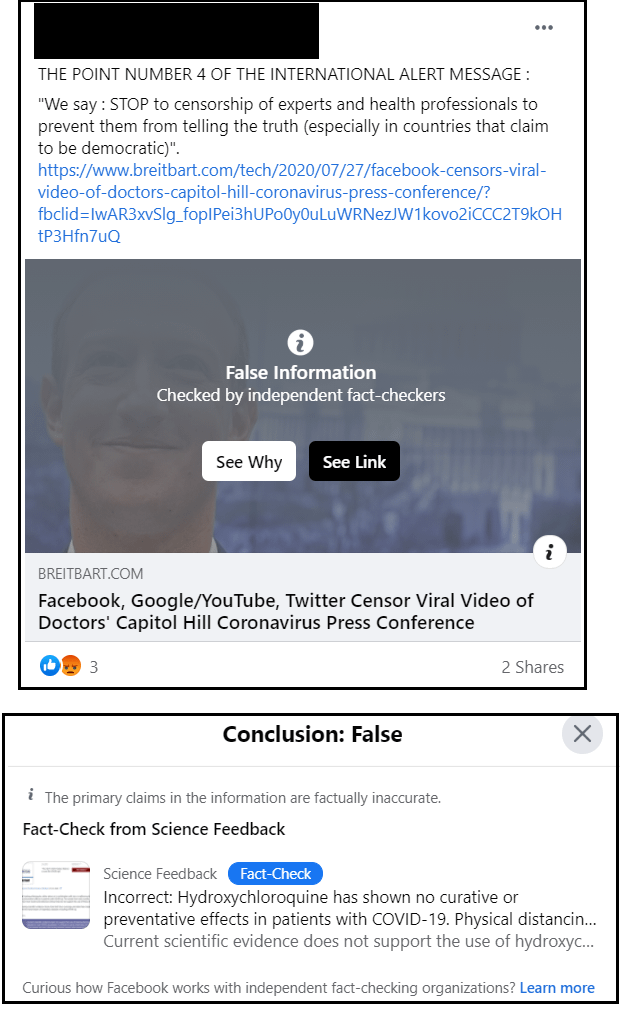

Once independent fact-checkers have flagged content as misinformation, Facebook displays a label informing users about the inaccuracy. Users who previously shared the item are notified, as are those who try to share it in future. A link to a fact-checking article is provided with additional context explaining why the content is labelled as "false", "partly false" or "altered" (see Figure below). Furthermore, Facebook's algorithms are supposed to reduce the visibility of disinformation in the news feed to limit its spread.

On July 27th, Facebook removed a viral live-streamed video of a press conference in Washington DC held by a group of self-proclaimed doctors called "America's Frontline Doctors", who endorsed the use of HCQ as a cure for COVID-19. The video, posted by the far-right news outlet Breitbart, was found to breach the platform's guidelines on false information about cures and treatments for COVID-19. Furthermore, a follow-up article (Figure 1), which reiterated the efficacy of the anti-malarial drug and accused Facebook, YouTube and Twitter of censorship, was labelled as disinformation by independent fact-checkers, since "these claims are, at best, unsupported".

Despite these fact-checking efforts, however, we found posts by other outlets such as Regulatory Watch, The Right Scoop and America First Projects that quote Breitbart verbatim and have not been labelled as containing false information. As these examples illustrate, Facebook's approach to fighting misinformation can still easily be circumvented, since its fact-checking policies operate on a claim-by-claim basis. This does not prevent the same misinformation from spreading through other users or groups on the platform and reaching wide audiences.

Indeed, such posts and accounts have an enormous reach, as the following four high-impact, unmoderated Facebook posts on HCQ demonstrate. They clearly show the significant limitations of Facebook's current content moderation policies and underline the urgent need to apply them consistently.

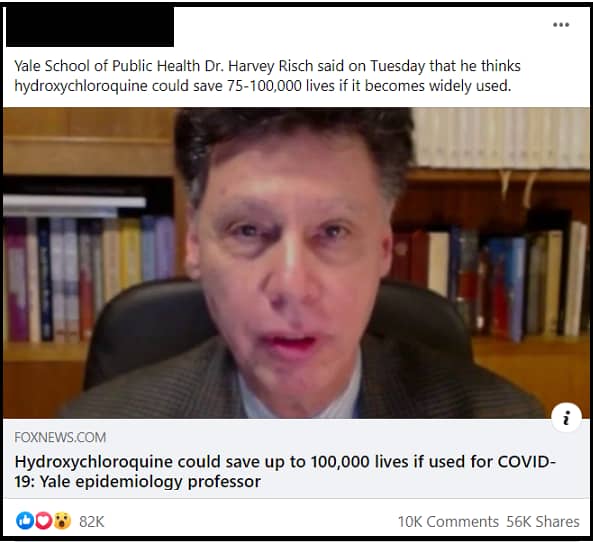

Our first example is this Fox News article, which argues in favour of the preventative use of HCQ, in direct contradiction of current WHO advice that further research into the prophylactic use of HCQ is required. According to CrowdTangle, there were almost 899,000 Facebook interactions with this post, i.e. around 579,000 reactions, 144,000 comments and 175,000 shares.

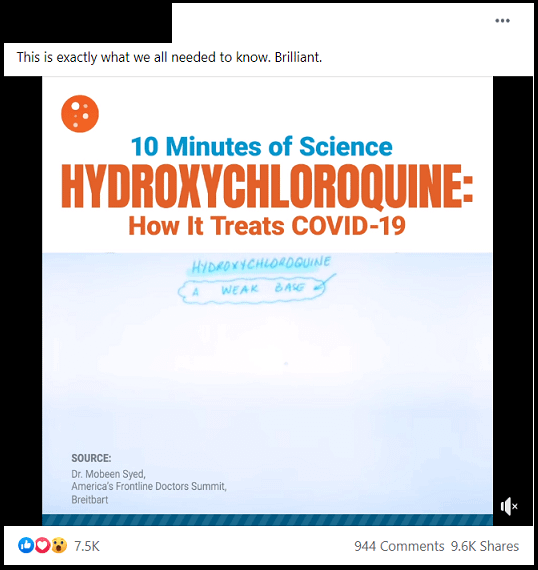

The second example is a video from the America's Frontline Doctors Summit, which claims that HCQ treats coronavirus. Had Facebook's policies been applied rigorously, this video, which propagates misinformation about COVID-19 treatments, would have been either removed or labelled accordingly. Instead, it went on to collect 75,000 reactions and almost 1,000 comments, and was viewed 1.6 million times.

The third example is a polarising narrative around HCQ that seeks to discredit a factually correct news article from AOL. The AOL article reports that a pro-HCQ video tweeted by President Trump and his son was labelled as "false information about cures and treatments for COVID-19" and removed by Twitter, Facebook and YouTube in line with their policies. This pro-HCQ page, however, shared the article in the false context of a strongly hyperpartisan narrative that aims to deepen political divisions on HCQ and to discredit scientists and medical professionals. According to CrowdTangle, this post has since gathered over 241,000 interactions on Facebook: 133,000 reactions, 71,000 comments and 37,000 shares.

Our fourth example is a post sharing a YouTube video that falsely claims Big Tech and mainstream media are carrying out a coordinated disinformation campaign and censoring Trump's pro-HCQ tweets. According to the video, rejecting HCQ as a COVID-19 treatment is a ploy to protect the profits of pharmaceutical companies developing COVID-19 vaccines. The shared video was viewed 23,000 times.

These examples clearly demonstrate that Facebook still leaves ample avenues for online communities to circumvent its policies on misinformation, allowing the continuous, high-impact proliferation of false narratives around HCQ and COVID-19.

This flaw arises from Facebook's approach, which sends posts to third-party fact-checkers for verification only if they are flagged by Facebook's algorithms or reported by users as potentially violating community standards. As evidenced here, this process fails to capture at least some Facebook posts containing misinformation.

Therefore, we cannot precisely quantify how much of the incorrect information shared on Facebook is actually undergoing verification. What is undoubtedly true is that the remaining misinformation continues to attract significant reach.

In 2018, Facebook claimed that "when a Page surpasses a certain threshold of strikes, the whole Page is unpublished", i.e. taken down. We could not find clear evidence of whether this guideline has been updated since its publication two years ago. At the same time, however, Facebook appeared to apply double standards to high-profile pages that generated revenue for the company. In particular, also in 2018, Facebook communicated the existence of the so-called "Cross Check" mechanism, which, for high-profile or popular pages, delegated content moderation decisions to Facebook staff rather than to its contracted moderators.

While Facebook may seem "too big to moderate" (it isn't!), at least some of its moderation failures likely stem from its apparent view that some pages are simply "too big to fail".

The continued absence of an accessible archive of Facebook content takedowns makes it impossible for independent third parties to evaluate the efficacy of Facebook's counter-misinformation policies and the consistency of their practical application. While Facebook does publish some basic transparency reports, they lack the detail, such as the precise reasons and policies behind each action taken, needed to make such third-party evaluations viable.

Hydroxychloroquine ads on Facebook

Using Facebook’s ad library, we also studied over 90 HCQ Facebook ads which circulated in the United States in August 2020.

Common themes included:

- Politicians advocating for the reinstatement of HCQ as COVID-19 treatment;

- Politicians attacking opponents for their support of HCQ;

- Promotion of political petitions advocating the reinstatement of HCQ as COVID-19 treatment;

- Ads by media organisations, promoting HCQ-related news;

- Faith-based organisations taking a stance on the use of HCQ as COVID-19 treatment.

Some of these examples demonstrate that political and issue-based advertising is another key channel for potentially harmful misinformation on Facebook. However, the company has announced that it will take no action to prevent politicians from making false claims in paid advertising, unless the ads contain "hate speech, harmful content and content designed to intimidate voters or stop them from exercising their right to vote". The fact that none of these advertisements has been disallowed indicates that the company does not currently consider HCQ and COVID-19 treatment misinformation in political ads to be harmful content, despite its COVID-19 safety policy, which states that it aims at "combating COVID-19 misinformation across our apps".

In conclusion, as we argued in the major new ITU/UNESCO study on disinformation, co-authored by EU DisinfoLab, internet communications companies urgently need to ensure their content moderation and curation responses are appropriately transparent and measurable, support human rights, and are implemented equitably. This means not granting exceptions to certain kinds of users or content, and applying these rules on a truly global scale. As it stands, this is not yet the case.