By Trisha Meyer and Alexandre Alaphilippe

- We publish two detailed timelines of Facebook, Google, TikTok and Twitter’s responses to disinformation related to COVID-19 and the US elections, with the data organised per platform and per response type.

- Online platforms heavily emphasized amplifying credible COVID-19 information from the World Health Organization and other public health authorities, including by providing free advertising space

- They also rapidly and regularly expanded their policies, especially on Misleading and Harmful Content, to ban, remove, demote or label harmful but not illegal disinformation

- In 2020 the role of online platforms in content curation became visible as never before. Their ability to react quickly is both encouraging and worrying if it is not accompanied by a clear hierarchy of principles and by stringent transparency and review measures

As we approach the one-year mark of the COVID-19 pandemic, we take a close look at how online platforms have responded to health and political disinformation on their services.

You can download our two timelines directly; they compile these updates by platform and by response type.

Methodology and dataset

For this article, we reconstructed a timeline of the responses of Facebook, Google, TikTok and Twitter on the basis of the reports they submitted to the European Commission as part of the Fighting COVID-19 Disinformation Monitoring Programme, as well as updates posted on their company blogs.1

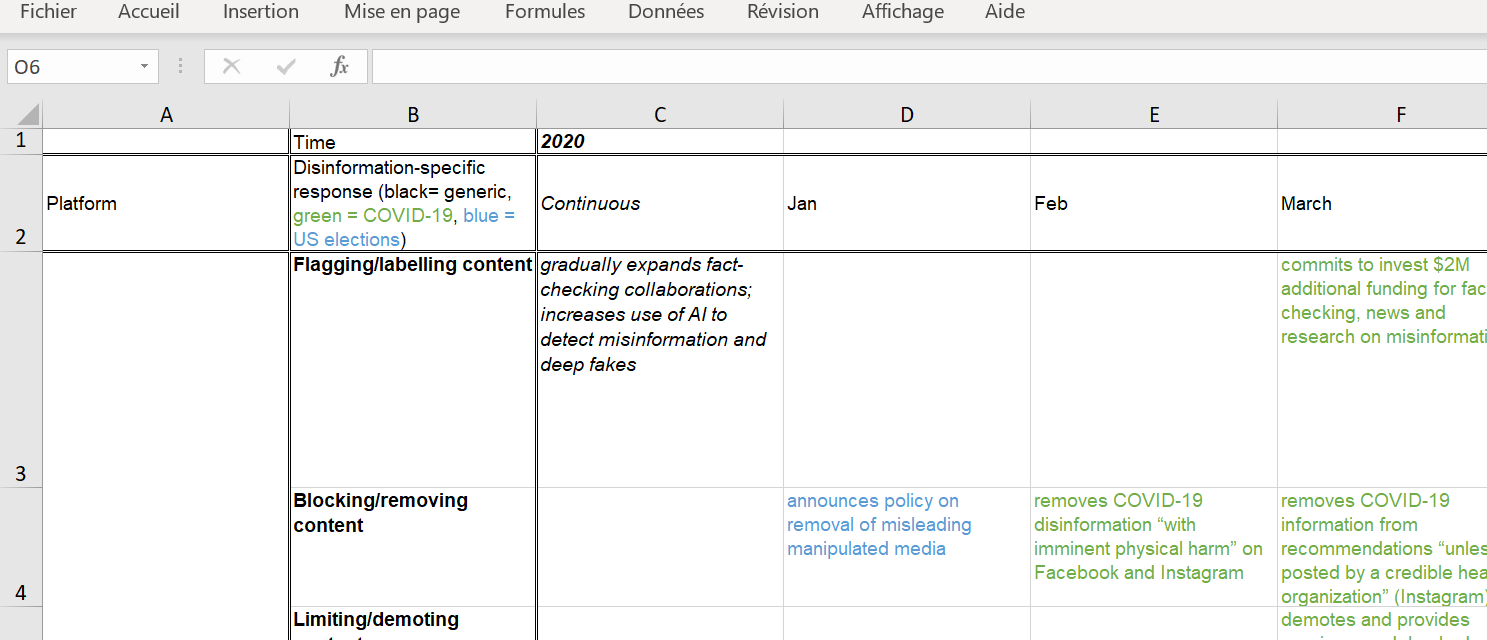

We mapped their measures by month and against the platform disinformation response typology we had developed as part of our contribution to the UNESCO/ITU Balancing Act report. In particular, we divide platform responses into four types of ‘content’ moderation: flagging/labelling, blocking/removing, limiting/demoting and prioritizing/amplifying; and four types of ‘other’ moderation: specific to accounts, advertising, users and research/review.

We are quite proud of our detailed comparative timeline, but it became a slight monstrosity 👾, impossible to capture in one image. The figure below shows a snippet of our mapping of the first two content moderation types. We kindly invite you to consult our online resources to see the two full timelines, organised per platform and per response type, in all their glory. There is much more packed into them than we can recap in this brief article.

Key highlights and take-aways

By golly, has it been a busy year!

As the pandemic hit, we saw online platforms heavily emphasize authoritative content provided by public health officials: through in-app notices, educational pop-ups and prompts, by launching dedicated hashtags and educational centers, and by surfacing credible public health information at the top of feeds and in COVID-19 related searches. Six months later, similar action to prioritize authoritative content was taken in preparation for the US elections, and nine months later to counter vaccine disinformation. One relatively novel development was the provision of free advertising credits to the World Health Organization (WHO) and public health authorities. Google, Facebook and Twitter also provided large grants for journalism and fact-checking.

In parallel, and most prominently during the run-up to and aftermath of the US elections, platforms regularly updated their policies on Misleading and Harmful Content, Sensitive Events and Civic Integrity to ban, remove or demote content and ads that contradicted public health guidance or undermined confidence in the elections. In August 2020, efforts to counter QAnon led Facebook to expand its Dangerous Individuals and Organizations Policy to include organizations tied to violence. Much later, in January 2021, Twitter updated its Coordinated Harmful Activity Policy. Infamously, in January 2021 Twitter permanently suspended, and Facebook indefinitely suspended, President Trump’s accounts over the incitement of violence surrounding the riot at the US Capitol.

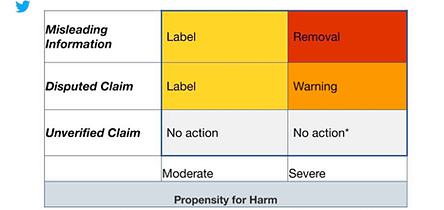

In 2020, platforms also extended their use of warning messages and stickers to flag potentially misleading content, discourage further sharing and point users to credible information. In February 2020, Twitter started taking action against synthetic and manipulated media, and in May against potentially misleading content related to COVID-19, the elections (and vaccines) (see figure below). Slightly later, in June 2020, Facebook started labelling state-controlled media, and in July the accounts of political candidates and federal officials.

Platform-specific responses to COVID-19 and US elections related disinformation

Facebook (Facebook, Messenger, Instagram, WhatsApp)

- Facebook was busy, with a frenzy of activity in February and March as the COVID-19 pandemic broke out, a steep ramping up of US election-related activities from June, and a gradual response in preparation for the COVID-19 vaccine rollout from September

- Facebook’s COVID-19 response emphasizes the prioritization of authoritative content, free advertising for public health agencies and the demotion of debunked information. It removes COVID-19 related disinformation that could contribute to ‘imminent physical harm’

- Facebook’s US elections response focused on policies for ads about political and social issues, and on policies allowing the removal of content that interfered with or suppressed voting. It also added warning messages to debunked content

“In total, we removed more than 265,000 pieces of content on Facebook and Instagram in the US between March 1 and Election Day for violating our voter interference policies.”

“Between March 1 and Election Day [we] displayed warnings on 180M+ pieces of content debunked by third-party fact-checkers that were viewed on Facebook by people in the US.”

Source: Facebook US 2020 Elections report

Google (Search, YouTube, AdSense)

- Google’s COVID-19 response was gradual, starting with the prioritization and amplification of accurate COVID-19 related content and free advertising credits for public health authorities. Notably, it also published a COVID-19 Medical Misinformation policy and expanded its Harmful Health Claims policy to remove content that contradicts health authorities and the scientific consensus on the health crisis

- Google’s US elections response focused on security and amplification of trusted news

- Ad-related policies are a powerful tool for Facebook and Google to wield. Both Facebook and Google temporarily paused US election ads after the polls closed

TikTok

- TikTok’s COVID-19 response started earlier than that of other platforms and was concentrated in time (January to March). A similar approach was followed for vaccines in December. It stresses information prioritization and amplification through in-app notices, stickers and brand takeovers. In October, TikTok launched Project Halo, a science communication effort, to raise awareness of and confidence in vaccines

- TikTok’s US elections response was also concentrated in time (August to October) and focused on an in-app guide and public service announcements. From October through Election Day, it provided daily updates on its election response

- TikTok does not allow political ads. Like Facebook and Google, it donated ad space to public health authorities

- TikTok announced that it will add friction to its disinformation response arsenal. When it identifies a video with unsubstantiated claims, TikTok will show a warning banner and display several warning prompts before viewers share the flagged video

Twitter

- In 2020 Twitter played an extensive editorial role on its platform, through labels, warnings, removals, reduced visibility, added friction and the promotion of authoritative content

- As part of its COVID-19 response, Twitter broadened its policy definition of harm to include content that contradicts COVID-19 public health guidance. In February and May, it also set out its staged approach to manipulated and synthetic media and to potentially misleading content

- There was a frenzy of activity at both content and account level in the lead-up to and aftermath of the US elections. In December, Twitter reported that its expanded friction measures (prompting Quote Tweets rather than Retweets, removing ‘liked by’ and ‘followed by’ recommendations, and only surfacing trends with ‘additional context’) did not have the expected results

“We’ll no longer be prompting Quote Tweets, and are re-enabling the standard Retweet behavior. We hoped this change would encourage thoughtful amplification and also increase the likelihood that people would add their own thoughts, reactions and perspectives to the conversation. However, we observed that prompting Quote Tweets didn’t appear to increase context: 45% of additional Quote Tweets included just a single word and 70% contained less than 25 characters.”

Source: Twitter 2020 Election update

In conclusion, in response to game-changing events such as the pandemic or the use of disinformation by US President Trump, online platforms concentrated their responses on improving their content curation efforts. They took unprecedented measures to minimize harm. Some policy updates were clearly planned, such as Twitter’s graduated response to synthetic and manipulated media, while others were quick reactions to ongoing events. This rapid expansion of disinformation policies culminated in January 2021, when multiple platforms banned President Trump and Parler.

This ability to react quickly is both encouraging and worrying. Encouraging, because it demonstrated that platforms, under societal pressure, can behave as public interest utilities in specific cases. Worrying, because the vast majority of the measures taken do not address the root causes embedded in the architecture of information distribution. Without this discussion, we fear that debates about censorship and its abuses will overshadow the work needed to build a more inclusive and peaceful information ecosystem.

As first steps to address this issue, EU DisinfoLab proposes:

- To implement meaningful transparency on online content distribution, including the auditing of algorithms, reinforced transparency in the online advertising ecosystem and transparent processes for content promotion/demotion;

- To empower civil society (academics, researchers, journalists, CSOs) and independent regulators in their role of holding online actors accountable;

- To sanction bad-faith actors, especially those who repeatedly try to escape transparency and accountability to society.

1. We used the sources below to map the platform responses on a month-by-month basis. This was not always a straightforward exercise, and we would be very happy to rectify any error you may spot! We did not include company updates related to support for health workers, small businesses, non-profits, children, social movements, communities, mental health, emotional well-being or diversity, as these were not specific to combating disinformation on the platforms.

All: monthly platform reports from August 2020 for the European Commission Fighting COVID-19 Disinformation Monitoring Programme

Facebook: Facebook Coronavirus Newsroom updates, Facebook US 2020 Elections report, Facebook Key Elections Investments and Improvements timeline

Google: Google Keyword COVID-19 updates, Elections Google updates

TikTok: TikTok Safety Center – COVID-19, TikTok Safety updates, TikTok Integrity for the US Elections

Twitter: Twitter Blog, Twitter Coronavirus updates, Twitter Blog Elections tag