On 16 October, EU DisinfoLab hosted its monthly webinar with Michael Bossetta, a postdoctoral researcher at Lund University. His research centres on social media platforms: how politicians and citizens use them during elections, and how governments exploit them to conduct cyber espionage. Michael produces and hosts the Social Media and Politics podcast, which is freely available on any podcast app. You can follow him on Twitter (@MichaelBossetta) and follow the podcast at @SMandPPodcast.

Digital Architectures

Michael began by arguing that political communication on social media is mediated by a platform’s digital architecture: the technical protocols that enable, constrain, and shape user behaviour online. Broadly speaking, this concerns what platforms allow us to do, what they do not allow us to do, and how this shapes the way we communicate online, whether through likes, comments, retweets, shares, or other features. In his study, Michael broke the digital architecture of social media platforms into four distinct categories:

- Network Structure is how connections between accounts are created and maintained;

- Functionality is how users can engage with the technology;

- Algorithmic Filtering is how algorithms select and prioritise the content users see;

- Datafication is how user interactions are converted into data points that can be modelled.

The way platforms are coded and designed influences how political actors communicate. To illustrate this, Michael used Snapchat to show how hardware affects the way users communicate, posing a simple question: what does it imply that Snapchat is only available as a smartphone app?

To answer it, he compared how one event from Marco Rubio’s 2016 presidential campaign trail was posted on Snapchat and on Instagram. Michael explained that Instagram lets you take content, filter it, and release it at a strategic moment, whereas Snapchat, as a smartphone-only app, favours posting in the moment. On Instagram, political campaign teams therefore have greater control over how their candidate is presented.

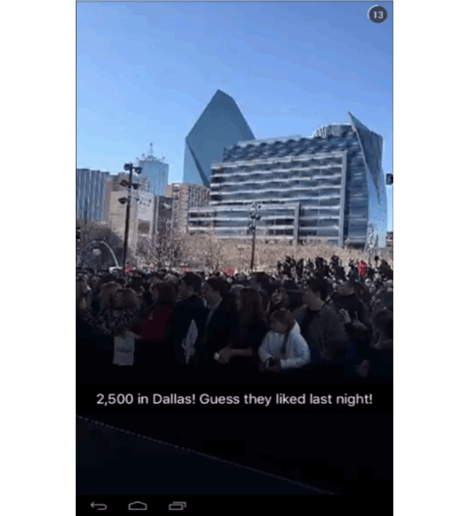

Michael went on to ask, from an informational point of view, whether a more real-time platform like Snapchat actually gives you time to communicate information accurately. As the two screenshots show, the number of supporters differs between the two posts: the Snapchat post put the crowd at 2,500 during the event, whereas the total tally the campaign uploaded to Instagram afterwards was 1,500 higher. Posting to Instagram after the fact therefore gives campaigns time to gather accurate information.
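For a sense of scale, here is the arithmetic behind the two tallies, taking the Snapchat figure as the baseline:

```latex
\text{Instagram tally} = 2{,}500 + 1{,}500 = 4{,}000,
\qquad
\frac{1{,}500}{2{,}500} = 0.6 = 60\%
```

In other words, the real-time Snapchat post undercounted the crowd by 1,500 people, or 60% of its own figure.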

Social Engineering – Phishing 2.0

Michael then presented his first case study, which examined how susceptible Americans are to spear phishing and to clicking on disinformation during an election. The study was inspired by cases of U.S. government employees being targeted through spear phishing on Twitter, particularly by Russian, Iranian, and Chinese hackers. Michael chose Twitter for the experiment because its architecture makes it a “phisher’s paradise” (Chhabra et al., 2011). But how so?

- Very easy account automation;

- Open APIs and an open network structure, which are conducive to open-source investigation of potential targets;

- Short URL support that hides the ultimate destination of a link;

- @mention notifications that reach users outside your network;

- News-oriented content profile: so much news circulates on the platform that disinformation is easy to hide.

Michael then used SNAP_R, a Python tool, and fed it a list of Twitter users. SNAP_R scraped these profiles for their recent tweets and generated personalised content based on them. For example, if a user tweeted a lot about sports, the tool generated content reflecting that interest. Each tweet followed this structure:

@mention + generated content + URL to click

To make the sending account look authentic, Michael modelled its page on the Washington Post. Because the experiment ran ahead of the 2018 U.S. midterms, Michael selected users who were politically expressive and distinctly partisan, and the tweets were designed to reflect their views.

Overall, 138 users were included in the study, and only around 3 of them verified the source of the account. One distinctive result was that susceptibility to spear phishing cut across ideology and devices. In other words, left-wing and right-wing Twitter users were more or less equally susceptible, and it made no difference whether users engaged with the tweet on a mobile device or on a desktop.
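Expressed as rates, and treating the approximate figure of 3 verifiers as exact:

```latex
\frac{3}{138} \approx 0.022 = 2.2\%,
\qquad
\frac{138 - 3}{138} = \frac{135}{138} \approx 0.978 = 97.8\%
```

So roughly 98 in every 100 targeted users did not check who was behind the account.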

Networked Disinformation – Black Trolls Matter

Michael’s final case study focused on how Russia’s Internet Research Agency (IRA) used sockpuppets to spread disinformation on Twitter during the 2016 U.S. election. Sockpuppets are accounts that impersonate ordinary citizens: internet trolls hiding behind identities that mimic particular nationalities or ethnicities. Michael argued that the information shared by these IRA sockpuppets seemed more persuasive because the accounts mimicked users’ friends, or people of the same ethnicity or socioeconomic status.

In their study, Michael and his colleagues developed a model of networked disinformation to show how this mechanism worked in the case of the IRA on Twitter.

The process starts with an internet troll creating an account under a fake identity, i.e. a sockpuppet account. Whatever the sockpuppet shares reaches the account’s friends and followers, some of whom have been tricked or socially engineered by the sockpuppet and pass the content on to their own networks. Michael affirmed that this is where the effect of disinformation becomes visible, because those further users receive the information from someone they know and trust.
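The cascade described above can be sketched as a breadth-first traversal over a follower graph. This is a hypothetical illustration, not the model from the study: the function name `trace_cascade`, the toy account names, and the simplification that an account either always or never reshares are all illustrative assumptions.

```python
from collections import deque


def trace_cascade(followers, resharers, source):
    """Return {account: hop} for every account a sockpuppet's post reaches.

    followers: dict mapping an account to the list of accounts following it.
    resharers: set of accounts that pass the post on (hypothetical assumption).
    source:    the sockpuppet account that posts first (hop 0).
    """
    reached = {source: 0}
    queue = deque([source])
    while queue:
        account = queue.popleft()
        # Only the sockpuppet itself and accounts that reshare push the post further.
        if account != source and account not in resharers:
            continue
        for follower in followers.get(account, []):
            if follower not in reached:
                reached[follower] = reached[account] + 1
                queue.append(follower)
    return reached


# Toy follower graph: alice is socially engineered into resharing, bob is not.
followers = {
    "sockpuppet": ["alice", "bob"],
    "alice": ["carol", "dave"],
    "bob": ["erin"],
}
reached = trace_cascade(followers, {"alice"}, "sockpuppet")

# Accounts at hop 2 or more saw the post via a trusted contact, not the sockpuppet.
second_hand = [account for account, hop in reached.items() if hop >= 2]
print(second_hand)  # ['carol', 'dave']
```

Here carol and dave never follow the sockpuppet, yet they receive its content from alice, which mirrors the point that the persuasive step happens one hop removed from the fake account.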

Michael and his team categorised the accounts into 10 types, building on previous work by Linvill and Warren (2018). Their results were similar but more nuanced; a key difference was that Michael and his team created a separate ‘black activist’ category.

This categorisation was important for analysing the tweets from the IRA accounts. ‘Black activist’ accounts made up a relatively small share of IRA activity. However, when Michael and his team looked at the tweets that got at least one reaction, they found that 70% of ‘black activist’ tweets got likes and over 70% were retweeted. Right-wing trolls came next, followed by left-wing trolls and then fake news accounts.
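One way to compute a per-category figure like this is sketched below, assuming the statistic is the share of each category’s tweets that received at least one like (or retweet). The field names and the four sample tweets are hypothetical and are not data from the study.

```python
from collections import defaultdict


def engagement_share(tweets, metric):
    """Share of each category's tweets with at least one reaction of `metric`.

    tweets: list of dicts with a "category" key and integer reaction counts
            (field names are hypothetical).
    metric: which reaction count to check, e.g. "likes" or "retweets".
    """
    totals = defaultdict(int)
    engaged = defaultdict(int)
    for tweet in tweets:
        totals[tweet["category"]] += 1
        if tweet[metric] > 0:
            engaged[tweet["category"]] += 1
    return {category: engaged[category] / totals[category] for category in totals}


# Made-up sample records, for illustration only.
tweets = [
    {"category": "black_activist", "likes": 12, "retweets": 3},
    {"category": "black_activist", "likes": 0, "retweets": 1},
    {"category": "fake_news", "likes": 0, "retweets": 0},
    {"category": "fake_news", "likes": 2, "retweets": 0},
]
print(engagement_share(tweets, "likes"))     # {'black_activist': 0.5, 'fake_news': 0.5}
print(engagement_share(tweets, "retweets"))  # {'black_activist': 1.0, 'fake_news': 0.0}
```

Computing the share within each category, rather than counting raw engaged tweets, is what lets a small category such as ‘black activist’ rank above larger ones.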

Further statistical analysis found that left-leaning identities correlated with the most retweets, and that the strongest predictor of an IRA tweet being retweeted was that it came from a ‘black activist’ account. Michael pointed out an interesting dynamic: although the ‘black activist’ sockpuppets were not numerous, they were still strongly correlated with retweets. Intriguingly, ‘fake news’ accounts did not correlate with high engagement, whereas all three identity categories (black activist, right-wing, left-wing) did.

Michael ended his presentation by arguing that we should be looking at identities rather than fake news accounts, because Twitter users reacted more to the identity-based sockpuppet accounts than to the fake news accounts. He later said ‘when trying to measure disinformation by looking at information from fake news outlets, you’re probably underestimating the amount of disinformation online, and you’re probably not looking at where the effective disinformation is’.

Further links:

- Digital Architectures – The Digital Architectures of Social Media: Comparing Political Campaigning on Facebook, Twitter, Instagram, and Snapchat in the 2016 U.S. Election.

- Case Study 1 – A Simulated Cyberattack on Twitter: Assessing Partisan Vulnerability to Spear Phishing and Disinformation ahead of the 2018 U.S. Midterm Elections.

- The Weaponization of Social Media: Spear Phishing and Cyberattacks on Democracy