Did you know that personalisation algorithms influence what you chose yesterday, what you will choose today, and what you will choose tomorrow?

Have you ever wondered what kind of information social media platforms like Facebook promote through personalisation algorithms?

These and many other questions were raised and clarified during the webinar hosted by EU DisinfoLab on 20 March 2019 with our guest Claudio Agosti, founder of Facebook Tracking Exposed (FbTrex). During the webinar, we discussed how Facebook's personalisation algorithms can diminish our ability to make informed decisions.

What are personalisation algorithms?

First of all, personalisation algorithms are tools that help to customise information. Personalisation is a process that collects, stores and analyses information about site visitors in order to deliver customised information at the right time. Based on user identities and interactions, specific bits of information are given priority while others are hidden from view. By deploying this filtering mechanism, Facebook ensures that each user has a meaningful experience of the service, instead of dealing with an unorganised flow of information. However, the intentions behind these algorithms are beginning to be questioned.

How do they affect our ability to make informed choices?

This is where FbTrex comes into play. It is an open-source tool developed as part of a research project: a browser extension that collects data on how personalisation algorithms shape what each user sees. The data collected help researchers assess how current filtering mechanisms work and how personalisation algorithms should be modified to minimise their potentially dangerous social effects. The main idea behind the FbTrex browser extension is to show users which kinds of content are being pushed to them.

During the webinar session, Claudio described why personalisation algorithms are a political matter and how “filter bubbles” can reduce users’ ability to make informed choices. In the current debate around misinformation ahead of the upcoming European elections, Facebook and Google claim to be neutral (or possibly abused by third parties), yet the algorithms used by these platforms promote specific kinds of content to their users.

Besides filtered content and advertising, Facebook also has ‘viral content’: posts that appear in your feed because of your friends’ interactions. Here, again, the platform decides on the user’s behalf which kind of content to show. Both users and content creators should therefore be aware of the consequences of their online activities and of the information that is being personalised for, and by, them.

Facebook black box

An experiment in which a group of students used the FbTrex browser extension showed that, although the users followed the same news media, each of them received a filtered version of the content. During the experiment, some users shared or viewed certain publications while others simply scrolled through the timeline. One finding was that a media page followed by three students did not promote the same articles in each of their timelines. More accurate methodologies have been used in tests carried out in Argentina and in Italy.
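To make the comparison concrete, here is a minimal sketch of how the overlap between timelines could be measured. The student names, article identifiers and the choice of the Jaccard index are illustrative assumptions; they are not data from the experiment, nor the method FbTrex itself uses.

```python
from itertools import combinations

# Hypothetical data: articles from one media page that each student
# was shown in their timeline (not taken from the actual experiment).
timelines = {
    "student_a": {"article_1", "article_2", "article_3"},
    "student_b": {"article_2", "article_3", "article_4"},
    "student_c": {"article_3", "article_5"},
}


def jaccard(a, b):
    """Share of articles two timelines have in common (0 = disjoint, 1 = identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def pairwise_overlap(timelines):
    """Jaccard overlap for every pair of users, keyed by the (sorted) pair of names."""
    return {
        (u, v): jaccard(timelines[u], timelines[v])
        for u, v in combinations(sorted(timelines), 2)
    }


def seen_by_all(timelines):
    """Articles that appeared in every user's timeline."""
    return set.intersection(*timelines.values())


if __name__ == "__main__":
    for pair, score in pairwise_overlap(timelines).items():
        print(pair, round(score, 2))
    print("Seen by everyone:", seen_by_all(timelines))
```

A low overlap between users who follow the same page is the kind of signal the experiment describes: the same source, but a different selection for each person.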

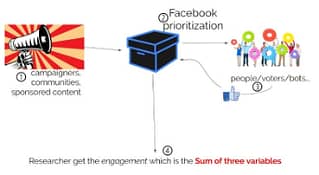

Analysis of the algorithm often uses engagement as its metric. However, the way engagement is calculated is not entirely clear. The process is as follows: first, the content is created and goes into Facebook's black box, which then decides whether it should appear in users' timelines. Then, users may engage by liking, commenting on or sharing the content. Finally, a researcher takes the result and calculates the engagement. The figure obtained therefore combines three different variables, each with its own responsibility: the content creator, Facebook's algorithm, and the users.
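The following sketch illustrates why a raw engagement figure is hard to interpret. It assumes, purely for illustration, that engagement is the unweighted sum of likes, comments and shares, and that the number of timelines a post was shown in (`impressions`) is known. Neither assumption comes from the webinar, and outside researchers usually cannot see that number.

```python
from dataclasses import dataclass


@dataclass
class Post:
    likes: int
    comments: int
    shares: int
    # Hypothetical field: how many timelines the black box placed the post in.
    impressions: int


def raw_engagement(post):
    """Unweighted sum of interactions (an assumed, simplified definition)."""
    return post.likes + post.comments + post.shares


def engagement_per_impression(post):
    """Interactions relative to how often the post was actually shown."""
    if post.impressions == 0:
        return 0.0
    return raw_engagement(post) / post.impressions


# Two made-up posts: the first was shown ten times more often than the second.
widely_shown = Post(likes=80, comments=10, shares=10, impressions=10_000)
rarely_shown = Post(likes=40, comments=5, shares=5, impressions=1_000)

if __name__ == "__main__":
    for name, post in [("widely_shown", widely_shown), ("rarely_shown", rarely_shown)]:
        print(name, raw_engagement(post), engagement_per_impression(post))
```

In this made-up case the widely shown post has the higher raw engagement, yet the rarely shown one drew more interactions per viewer. Without knowing what the black box decided, the raw number cannot separate the quality of the content from the platform's choice to distribute it.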

The role of FbTrex is to analyse what comes out of Facebook's black box, that is, what Facebook considers to be relevant for a specific user. Studying the algorithm in this way is one of the foundations for unveiling the logic behind the personalisation of each user's news feed.

FbTrex's goal is to show why the algorithm is an issue and to empower Facebook users to better understand why they are shown specific content. Ultimately, the project believes that every individual has a right to control their own algorithm.

As a first step towards being able to analyse Facebook's algorithms properly, FbTrex is asking Facebook to start using a standard format for communicating data to its clients.

Claudio Agosti is a self-taught hacker who, since the late 1990s, has gradually become a techno-political activist as technology has begun to interfere with society and human rights. Over the past decades, he has worked on whistle-blower protection with GlobaLeaks, advocated against corporate surveillance, and founded facebook.tracking.exposed. He is a research associate with the DATACTIVE team at the University of Amsterdam (UvA).

Image credit: www.thoughtcatalog.com